How to analyze and interpret A/B testing results

Analyzing A/B testing results is one of the most important stages of an experiment. But it's also the least talked about. Here's how to do it right.

Summarize this articleHere’s what you need to know:

- Analyzing A/B testing results is crucial for understanding which variation to adopt or if adjustments are needed.

- Two key metrics to consider are Uplift, the performance difference between a variation and the control group, and Probability to Be Best, the chance of a variation having the best long-term performance.

- Analyzing results by audience can reveal hidden opportunities, as different groups might behave unexpectedly in the test.

- Beyond Uplift and Probability to Be Best, consider other metrics like conversion rate, engagement, and revenue to gain a comprehensive understanding.

- Don’t stop at statistical significance; look for practical implications and the potential impact of changes.

- Remember, A/B testing is an iterative process; use your findings to inform future tests and refine your optimization strategy.

Most experimentation platforms have built-in analytics to track all relevant metrics and KPIs. But before analyzing an A/B test report, it is important that you understand the following two important metrics.

- Uplift: The difference between the performance of a variation and the performance of a baseline variation (usually the control group). For example, if one variation has a revenue per user of $5, and the control has a revenue per user of $4, the uplift is 25%.

- Probability to Be Best: The chance of a variation to have the best performance in the long term. This is the most actionable metric in the report, used to define the winner of A/B tests. Whereas uplift may vary based on chance for small sample sizes, the probability to be best takes sample size into account (based on the Bayesian approach). The probability to be best does not begin calculating until there have been 30 conversions or 1,000 samples. To say it simply, the Probability to Be Best answers the question “Who is better?”, and uplift answers the question “By how much?”

Basic analysis

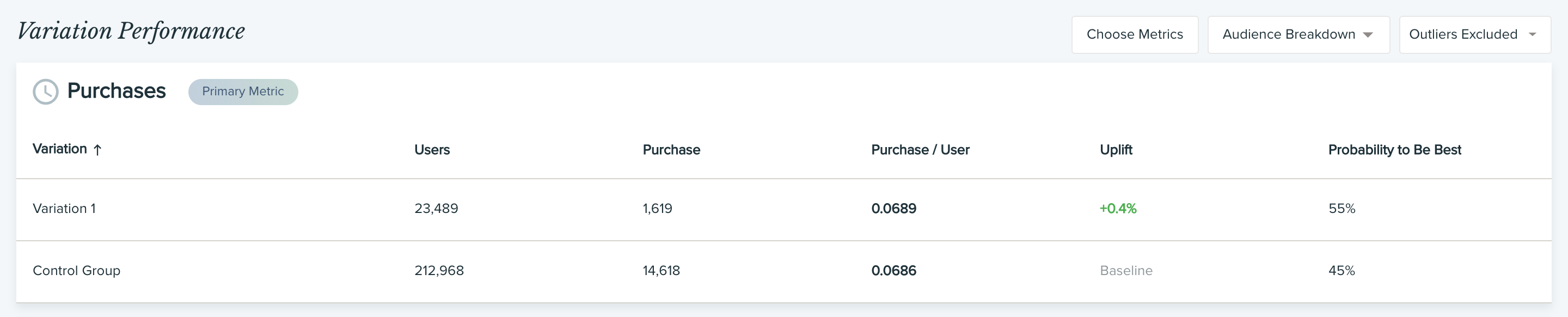

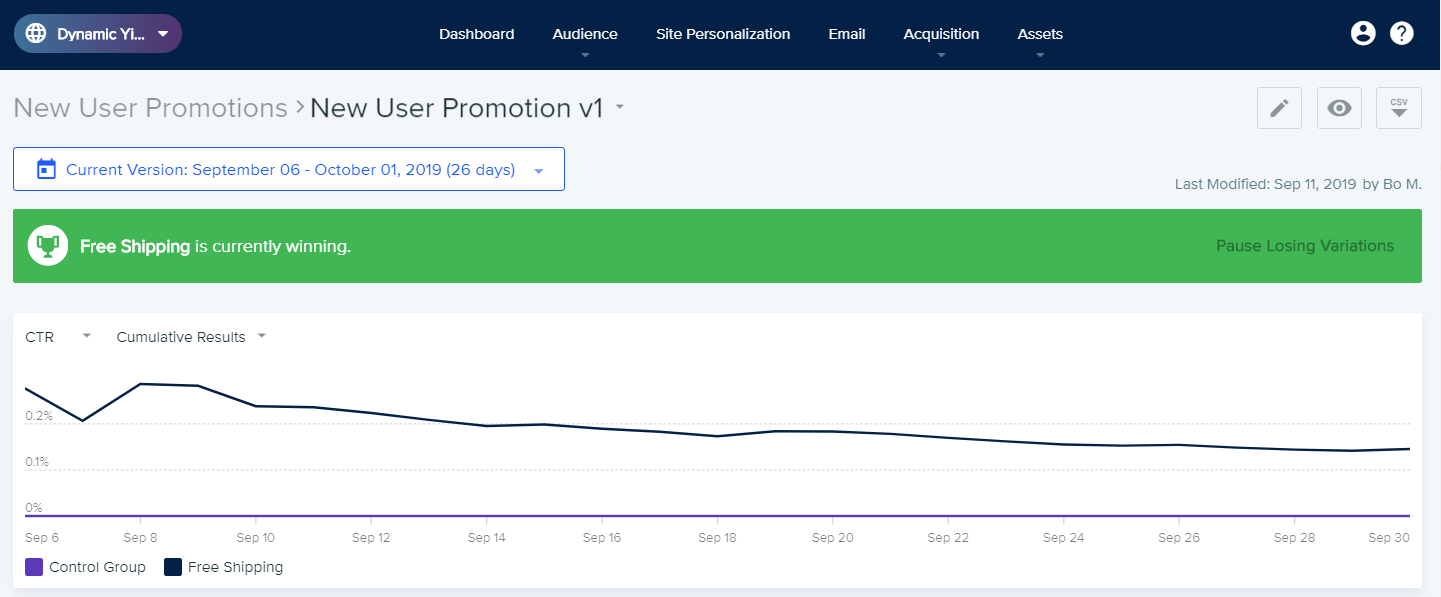

Start by checking the A/B test results to see if a winner has been declared, or for other information such as which variation is currently winning. If your experimentation does not provide the Probability to Be Best metric, use our Bayesian A/B Testing Calculator to examine the data and look for statistically significant results.

Typically, a winner will be declared if the following conditions are met:

- One variations has a Probability to Be Best score above 95% (the threshold can be changed in some platforms using the winner significance level setting).

- The minimum test duration has passed (default is 2 weeks). This is designed to make sure the results are not affected by seasonality.

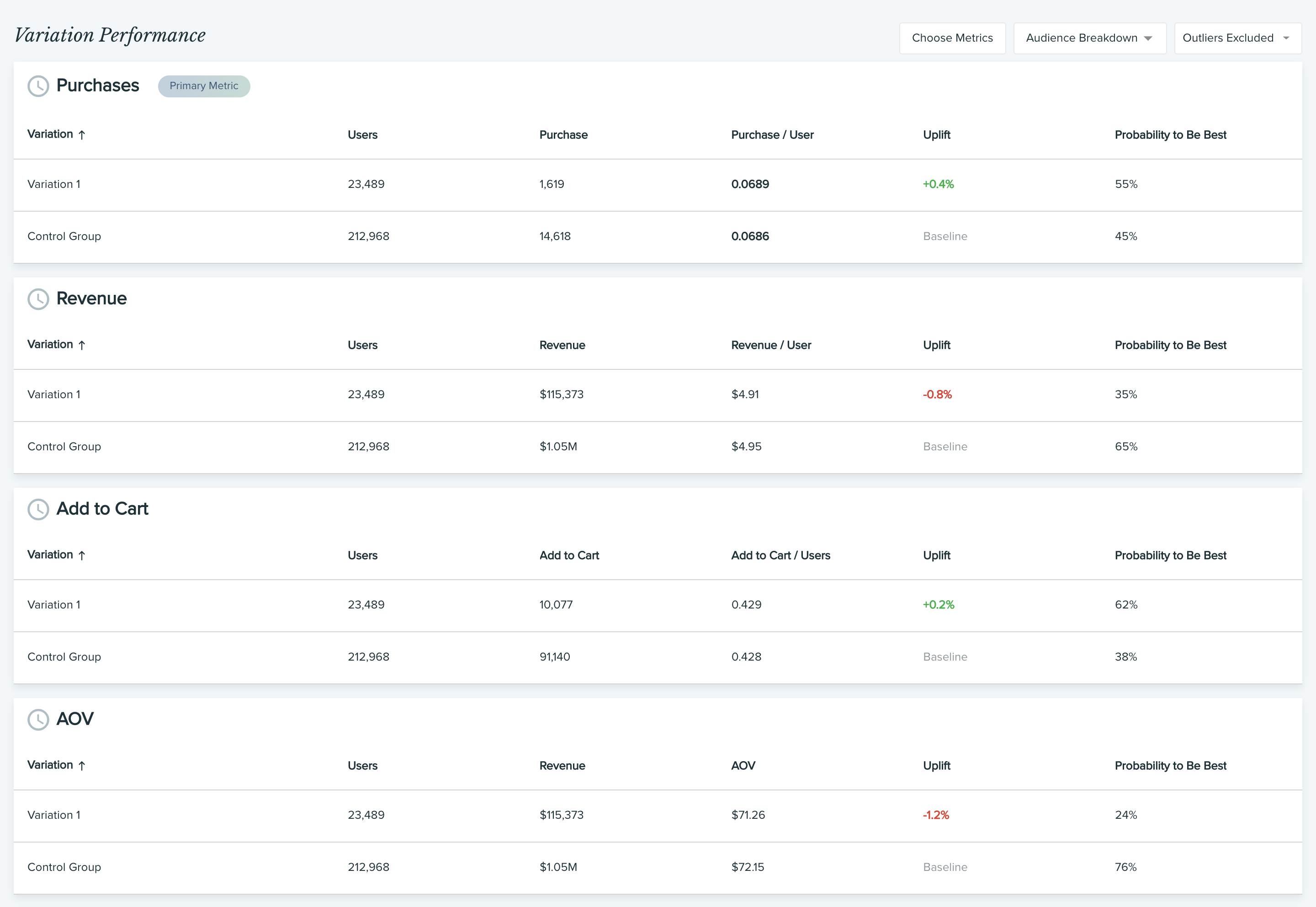

Secondary metrics analysis

While the winners of each test are based on the primary metric, some experimentation platforms like Dynamic Yield also measure additional metrics called secondary metrics. We recommend analyzing secondary metrics before concluding the experiment and applying the winning variation to all users for a few reasons:

- It can save you from making a mistake (e.g. Your primary metric is CTR, but the winning variation may reduces purchases, revenue, or AOV).

- It can lead to interesting insights (e.g. purchases per user dropped, but AOV increased, meaning the variation led to users purchasing less, but more expensive products and overall more revenue).

For each secondary metric, we suggest looking at the uplift and Probability to Be Best scores to see how each variation performed.

After your analysis, you can determine if you should serve all of your traffic with the winning variation, or adjust your allocation based on what you have learned.

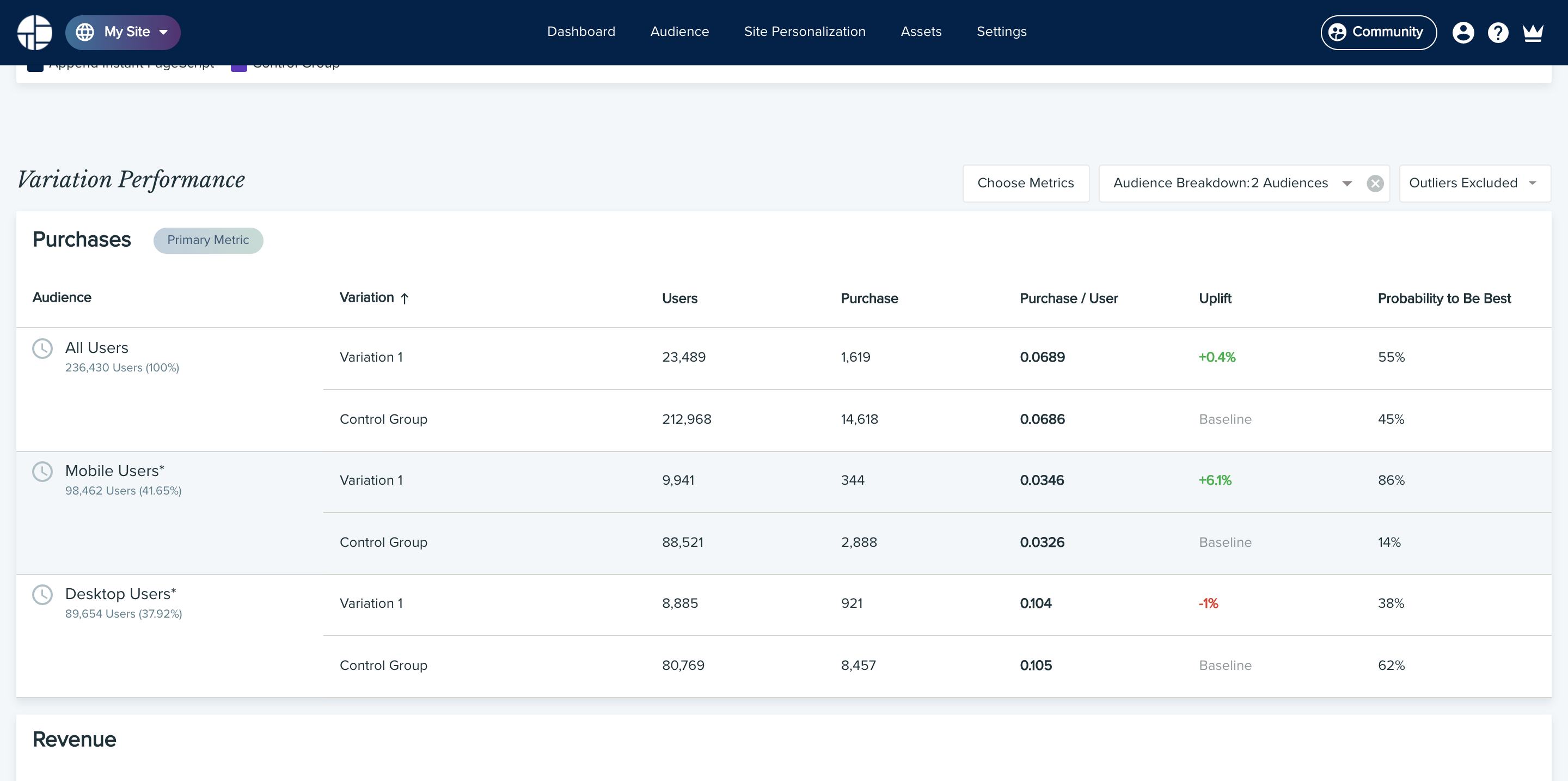

Audience breakdown analysis

Another good way to dig deeper is to break your results down by audience. This can help answer questions such as:

- How did traffic from different sources behave in the test?

- Which variation won for mobile and which for desktop?

- Which variation was most effective for new users?

I recommend selecting audiences that are meaningful to your business, as well as audiences that are likely to have different user behaviors and intent signals.

Once again, for each audience, look at the uplift and Probability to Be Best scores to see how each variation performed. After your analysis, you can determine if you should serve all of your traffic with the winning variation, or adjust your allocation based on what you have learned.

But sometimes, losing A/B tests are actually winning!

There’s no doubt that CRO and A/B testing works, long relied on for producing massive uplifts in conversion and revenue for those who properly identify the best possible variation for site visitors. However, the truth is that as delivering personalized interactions becomes more intrinsic to the customer experience, experiments that don’t take into consideration the unique conditions of individual audiences will end up with inconclusive results and statistical significance will become harder to achieve.

In this complex new world of personalization where one-to-one supersedes a one-to-many approach, “average users” can no longer speak for “all visitors.” That means previous ways of uncovering and serving the best experiences won’t suffice anymore, despite following best practices around sample size creation, hypotheses development, and KPI optimization.

Today, instead of accepting test results at face value and deploying variations expected to perform best overall for all users, marketers must understand that in doing so, they’ll be compromising the experience for another portion of visitors. Because there will always be segments of visitors for whom the winning variation is not optimal. Only after recognizing this flaw in test analysis and execution does it become clear that losing A/B tests can actually end up as winners, and that hidden opportunities once thought meaningless may actually bare the most fruit through a modernized, personal way of thinking.

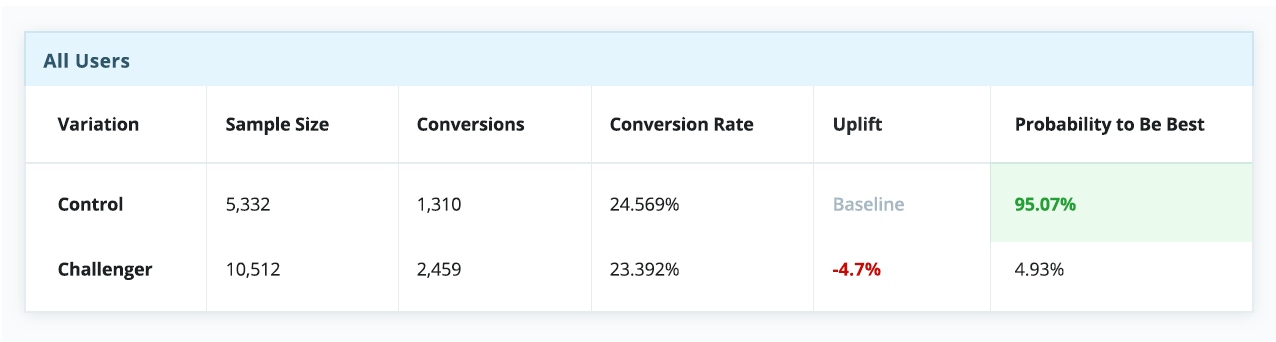

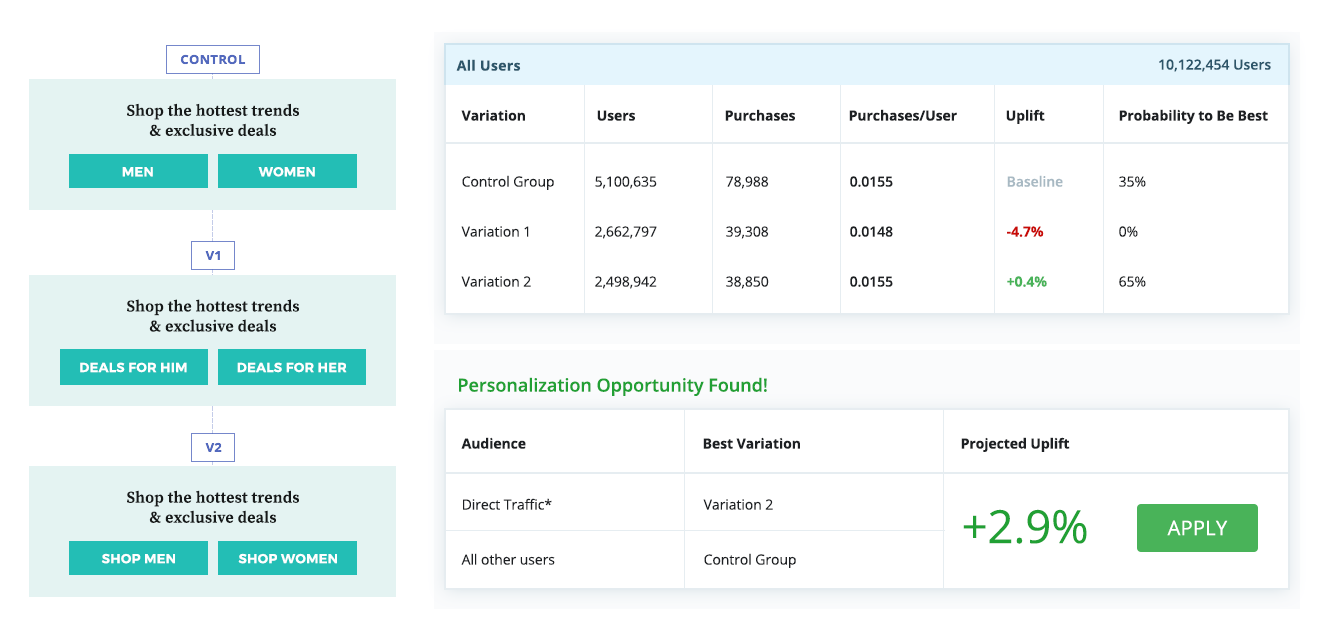

In the example below, find real results from an experiment which was live for about 30 days. Almost in a draw, at first glance, it appears as though the control is outperforming the challenger.

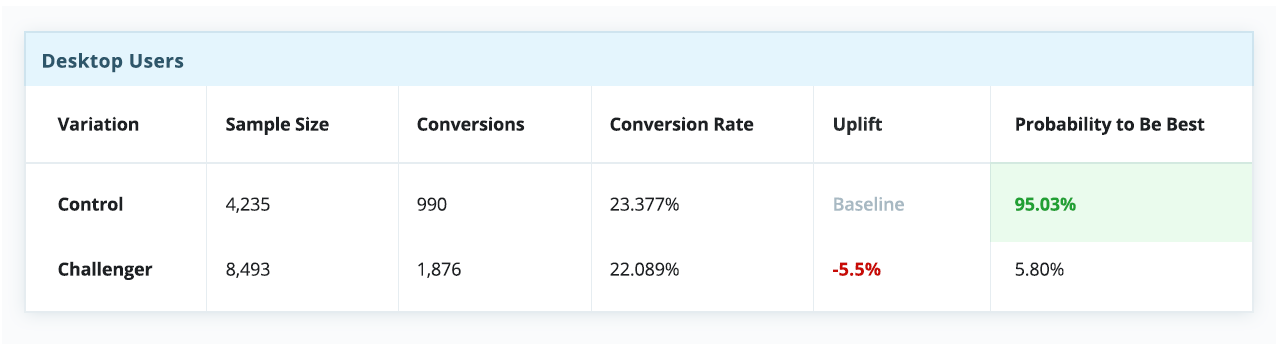

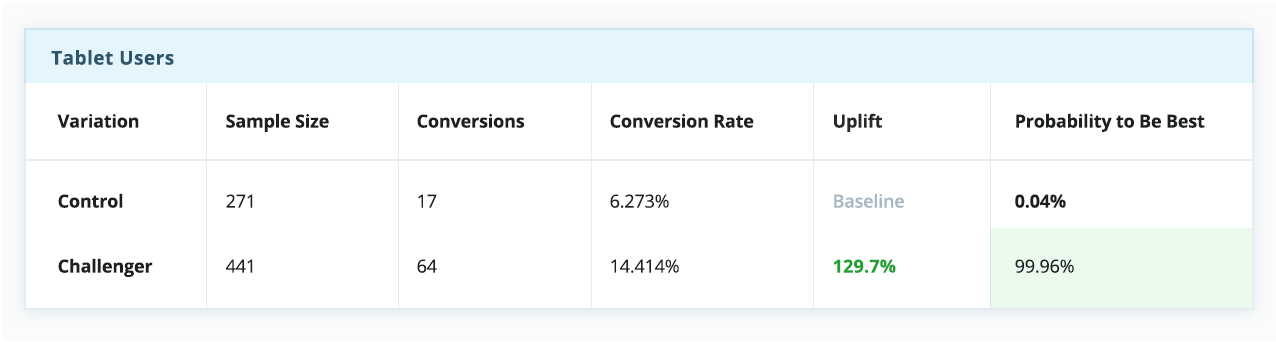

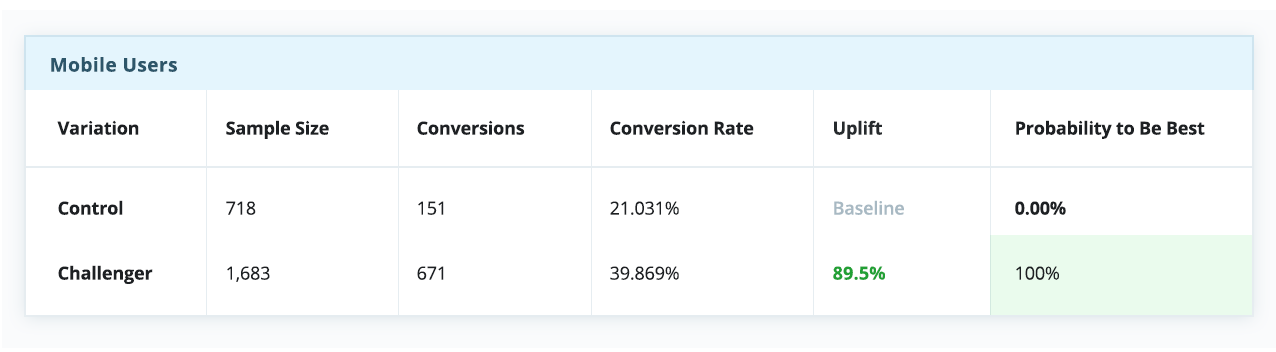

However, when breaking down the experiment report by devices, a relatively basic and straightforward measure, there’s a completely different story, indicating the control is a winner on desktop, but is dramatically outperformed by the challenger on both tablet and mobile.

In the above testing scenario, had a “winner takes all” strategy been set (which many faithfully do), the entire visitor pool would have unknowingly been served with a compromised experience that is “optimized” for an average user, despite a clear preference for the challenger across mobile web and tablet.

In another example, while the control group proves subpar on average, further analysis concludes it should, in fact, still be allocated to “All other users,” leaving the much larger “Direct Traffic” segment to receive its preference, variation two.

These references illustrate the importance of discovering the impact of test actions on different audience groups when running experiments, no matter how the segment is defined. Only after taking the time to thoroughly analyze the results of one’s experiments for different segments can deeper optimization opportunities be identified, even for tests that are failing to produce uplifts for the average user (which doesn’t actually exist).

While some of these scenarios won’t require more than a quick analysis on the part of a CRO Specialist, with busy testing cycles and constantly changing priorities, carving out the time to do so doesn’t always happen. This problem only becomes exasperated as the number of tests, variations, and segments increases, making analysis an incredibly data-heavy task.

Knowing this, how can one go back to a life of accepting the status quo, taking results at face value, and discarding treatments unnecessarily?