
One of the most turbulent presidential election campaigns in American history is entering its final days.

But whomever you intend to vote for, the potential to spread election disinformation is highly troubling, all the more so in this burgeoning age of generative artificial intelligence (AI), the type of AI that can churn out words, computer code, pictures and video based on text or other prompts from a user.

Seismic advancements in AI are making it harder for you to tell whether an announcement that appears to come from a candidate or a campaign representative is genuine. Supporters on the political fringes, along with trolls who simply like to stir up trouble, have more tools at their disposal than ever before.

To varying degrees, candidates have always made exaggerated claims, and meddling in elections dates to the earliest days of politics. But AI-enhanced tools, amplified by the speedy and vast reach of social media, are digital carcinogens that can sow doubt about what is or isn’t factual.


“The U.S. has confronted foreign malign influence threats in the past,” FBI Director Christopher Wray remarked at a national security conference. “But this election cycle, the U.S. will face more adversaries, moving at a faster pace and enabled by new technology.”

Alondra Nelson agrees. “Election hijinks are as old as time,” says Nelson, a professor at the Institute for Advanced Study, an independent research center in Princeton, New Jersey, and former acting director of the White House Office of Science and Technology Policy under President Biden. But with AI, “I do think there’s a step change from the past.”

The problem is both foreign and domestic, and it goes well beyond doctored text. Readily available online tools let anyone with even modest tech skills create bogus local news websites, clone voices, manipulate still photos and fabricate video so convincing that Candidate X appears to be somewhere he or she never set foot, saying something never uttered.

That dystopian future has begun. In January, an AI-generated robocall impersonating Biden’s voice urged some 5,000 Democrats in New Hampshire not to vote in the primary. A political operative tied to former Democratic primary challenger Dean Phillips admitted to creating the call.

On Sept. 26, the Federal Communications Commission fined the operative, Steve Kramer, $6 million for the scheme.

“We need to call it out when we see it and use every tool at our disposal to stop this fraud,” FCC Chairwoman Jessica Rosenworcel said in a statement.


The Biden robocall exemplifies how technology can be deployed to widen existing divisions and suppress votes in certain communities, says Claire Wardle, a professor in the School of Public Health at Brown University in Providence, Rhode Island, and cofounder and codirector of the Information Futures Lab. The idea is that changing even a few minds could tilt the scales in a close election.

Warren Buffett is so concerned about disinformation and deepfakes that the billionaire investor had his company Berkshire Hathaway issue a statement: "In light of the increased usage of social media, there have been numerous fraudulent claims regarding Mr. Buffett's endorsement of investment products as well as his endorsement and support of political candidates. Mr. Buffett does not currently and will not prospectively endorse investment products or endorse and support political candidates."

Here's what we know this campaign season and what to look out for:

1. If the message seems off, it’s probably false

What lent a modicum of legitimacy to the Biden robocall is that call quality these days is far from perfect. So damage can be wrought “if it sounds enough like somebody,” Wardle says.

The smartest thing voters can do under such circumstances is apply the smell test.

“Does it really make sense to you that Joe Biden is making a call telling you not to vote in the primary?” asks Hany Farid, a University of California, Berkeley, digital forensics professor and member of Berkeley’s artificial intelligence lab. “Take a breath because the fact is whether you’re 50 and above or a Gen Z or a millennial, we do tend to move very fast on the internet.”

2. ‘Cheap fakes’ can be as effective as slick fakes

The budget version of a deepfake, created with Adobe Photoshop or other editing software rather than AI, can wreak havoc, too, Wardle says. Cheap fakes might alter when an image was captured, make an older candidate look more youthful or change the context entirely.

In 2020, manipulated video of Nancy Pelosi made the former speaker appear as if she were intoxicated and slurring her speech. It went viral.

More From AARP

Can You Separate Real Election Information From Fakes?

Test how well you can spot potential deception

How to Talk to Your Kids and Grandkids About AI

7 ways grownups and loved ones can explore together

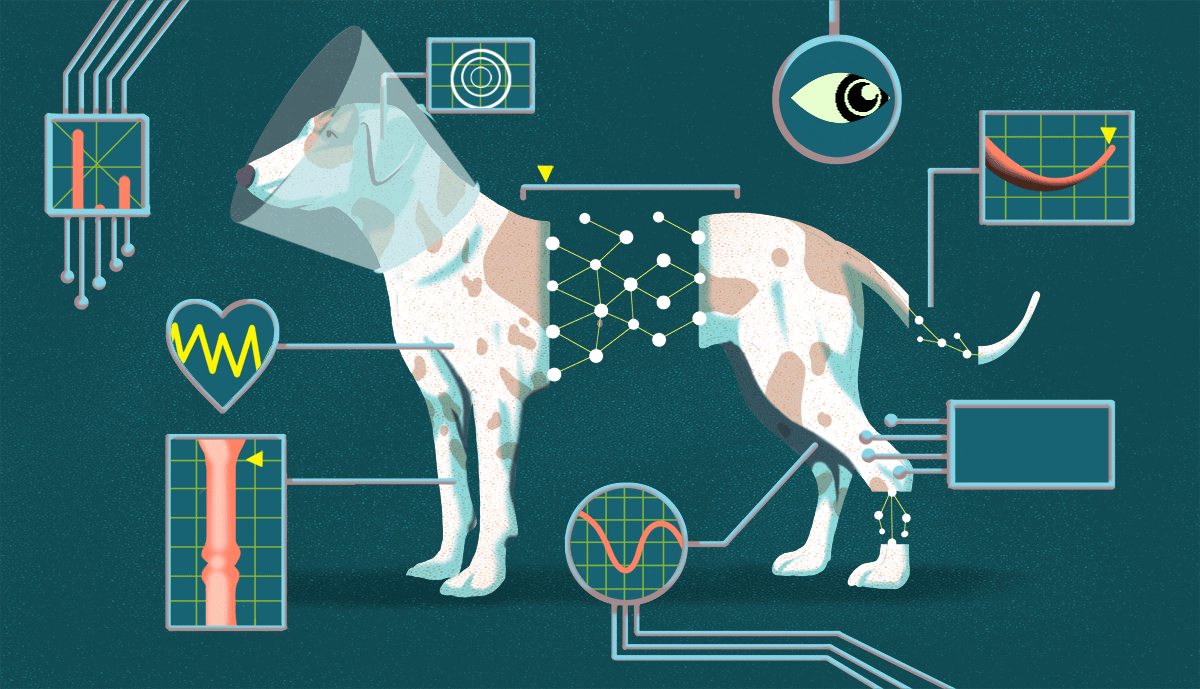

Your Veterinarian May Be Using AI to Treat Your Pet. Is That OK?

Artificial intelligence can make difficult diagnoses quickly, but there are downsides. Here’s what to know

Recommended for You