Motion User Guide

Match move an object in Motion

To use a Match Move behavior, you need at least two objects in your project: a source object and a destination object. Typically, the source is a video clip, but it can also be an object animated with keyframes or behaviors. The source object provides movement data based on a tracking analysis of its video or on its animation attributes. That movement data is then applied to the destination object. The destination object can be an image, a shape, text, a particle emitter, a camera, and so on.

Match move a destination object to a source object in a clip

In this workflow, the Match Move behavior tracks recognizable objects such as people or faces, animals, and cars in the source clip, then applies the resulting track data to a destination object. As a result, the destination object matches the movement of the tracked element in the source clip.

Import a source clip into your Motion project, then import a destination object (such as an image or clip), or add a destination object (such as a shape, particle emitter, or text).

The destination layer must be above the source video clip layer in the Layers list.

In the Layers list, select the destination object, click Behaviors in the toolbar, then choose Motion Tracking > Match Move.

Important: If you’re applying the Match Move behavior to a group, make sure the footage being analyzed resides outside of that group.

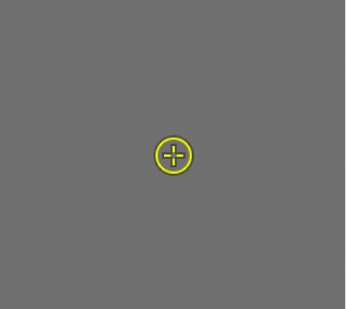

An object tracker conforming to the destination object is added to the canvas.

If necessary, change the shape or size of the object tracker.

In the Behaviors Inspector, click the Analysis Method pop-up menu, then choose one of the following options:

Automatic: Automatically chooses the most suitable analysis method. Because the best method is highly dependent on the properties of a clip and each use case, you may need to experiment with different analysis methods to achieve the best result.

Combined: Uses a combination of the Machine Learning and Point Cloud analysis methods (described below) to track position, scale, and rotation.

Machine Learning: Uses a machine learning model trained on a dataset to identify people, animals, and many other common objects, allowing the tracker to follow the subject in a specified region of video. Choose this option when absolute tracking precision isn’t required, such as when attaching titles or graphics to objects or people. This method can overcome moderate occlusion—when an object (such as a tree or car) briefly obscures the subject being tracked.

Point Cloud: Tracks a specific reference pattern and identifies how the pattern transforms from one frame to the next. Choose this option when you need more precise tracking of specific pixels. This method tracks position, scale, and rotation, and excels at tracking regions that are rigid and somewhat flat (from the camera’s point of view).
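To make "tracks a specific reference pattern" concrete, here is a minimal Python sketch of the simplest form of pattern tracking: translation-only block matching that scores candidate positions by the sum of squared differences (SSD). This is a swapped-in textbook technique, not Motion's Point Cloud method, which also tracks scale and rotation; the names `ssd`, `track_patch`, and the search `radius` are hypothetical.

```python
# Illustrative sketch only: translation-only template matching with an SSD
# score. NOT Motion's Point Cloud algorithm; it just shows what following a
# reference pattern from one frame to the next means in the simplest case.
from typing import List, Tuple

Frame = List[List[float]]  # grayscale image as rows of pixel values


def ssd(frame: Frame, patch: Frame, top: int, left: int) -> float:
    """Sum of squared differences between `patch` and the same-sized
    window of `frame` whose upper-left corner is (top, left)."""
    total = 0.0
    for r, row in enumerate(patch):
        for c, value in enumerate(row):
            diff = frame[top + r][left + c] - value
            total += diff * diff
    return total


def track_patch(frame: Frame, patch: Frame, prev: Tuple[int, int],
                radius: int = 2) -> Tuple[int, int]:
    """Search a (2*radius+1) x (2*radius+1) neighborhood around the previous
    position and return the (top, left) position with the lowest SSD."""
    ph, pw = len(patch), len(patch[0])
    fh, fw = len(frame), len(frame[0])
    best, best_pos = float("inf"), prev
    for dy in range(-radius, radius + 1):
        for dx in range(-radius, radius + 1):
            top, left = prev[0] + dy, prev[1] + dx
            # Skip candidate windows that would fall outside the frame.
            if top < 0 or left < 0 or top + ph > fh or left + pw > fw:
                continue
            score = ssd(frame, patch, top, left)
            if score < best:
                best, best_pos = score, (top, left)
    return best_pos
```

For example, in a 6 × 6 frame of zeros containing a 2 × 2 block of value 9.0 whose upper-left corner is at row 3, column 4, `track_patch(frame, [[9.0, 9.0], [9.0, 9.0]], prev=(2, 3))` returns `(3, 4)`.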

In the Behaviors Inspector, do one of the following:

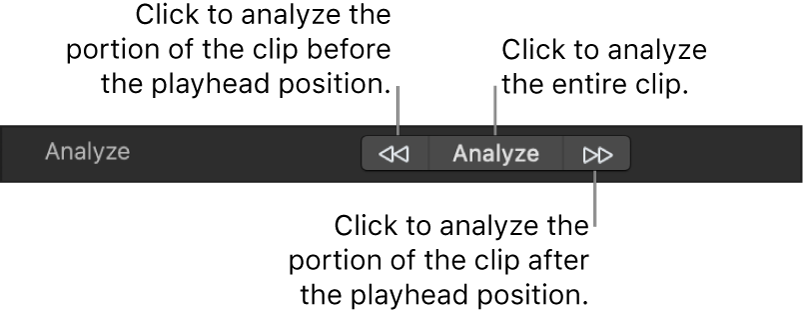

Analyze the entire clip: Click Analyze. The clip is analyzed forward from the playhead position to the end of the clip (or to the frame where the reference pattern can no longer be tracked), and then backward from the playhead position to the start of the clip.

Analyze the portion of the clip before the playhead position: Click the left arrows next to the Analyze button.

Analyze the portion of the clip after the playhead position: Click the right arrows next to the Analyze button.

The tracking analysis progress window displays the analysis method used for the track.

Tip: If you’re using the Machine Learning analysis method and observe jitter during the analysis (the onscreen object tracker bouncing or jumping from one size to another), try switching to the Point Cloud analysis method. Its tracker is much less susceptible to quick changes.

To stop the tracking analysis, click the Stop button in the progress window or press Esc.
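The three Analyze controls described above differ only in which frames they visit and in what order. The following minimal Python sketch restates that order. It assumes integer frame numbers and models "the reference pattern can no longer be tracked" as a hypothetical `trackable` predicate; it also assumes the backward pass stops in the same way. The function name and parameters are illustrative, not part of Motion.

```python
# Hypothetical sketch of the frame order for the Analyze controls.
# `trackable` and all names here are illustrative assumptions.
from typing import Callable, List


def analysis_order(playhead: int, first: int, last: int,
                   direction: str = "both",
                   trackable: Callable[[int], bool] = lambda f: True) -> List[int]:
    """Return frames in visit order.

    direction="both"     -> Analyze: forward from the playhead, then backward
    direction="forward"  -> right arrows: playhead to the end of the clip
    direction="backward" -> left arrows: playhead to the start of the clip
    A pass stops at the first frame where `trackable` returns False.
    """
    if direction not in ("both", "forward", "backward"):
        raise ValueError(f"unknown direction: {direction}")
    order: List[int] = []
    if direction in ("both", "forward"):
        for f in range(playhead, last + 1):
            if not trackable(f):
                break
            order.append(f)
    if direction in ("both", "backward"):
        # In "both" mode the playhead frame was already visited going forward.
        start = playhead - 1 if direction == "both" else playhead
        for f in range(start, first - 1, -1):
            if not trackable(f):
                break
            order.append(f)
    return order
```

With the playhead at frame 3 of a clip spanning frames 0 to 6, `analysis_order(3, 0, 6)` visits frames 3, 4, 5, 6, then 2, 1, 0.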

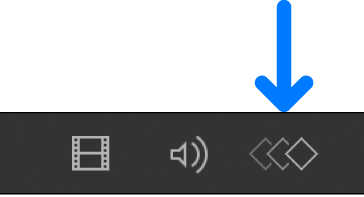

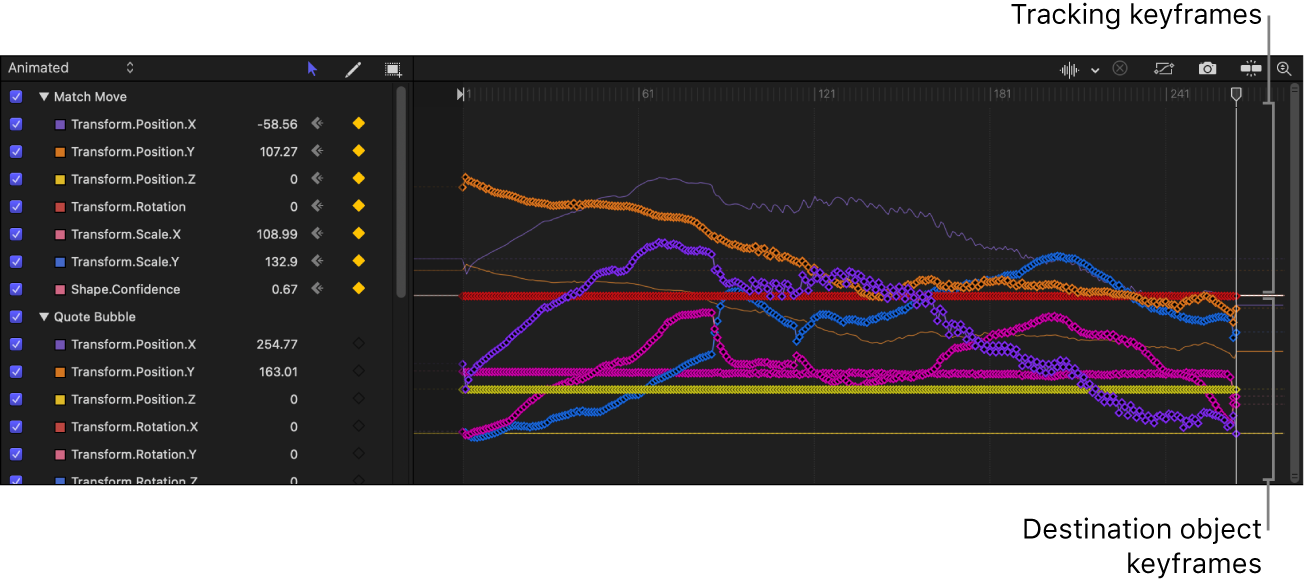

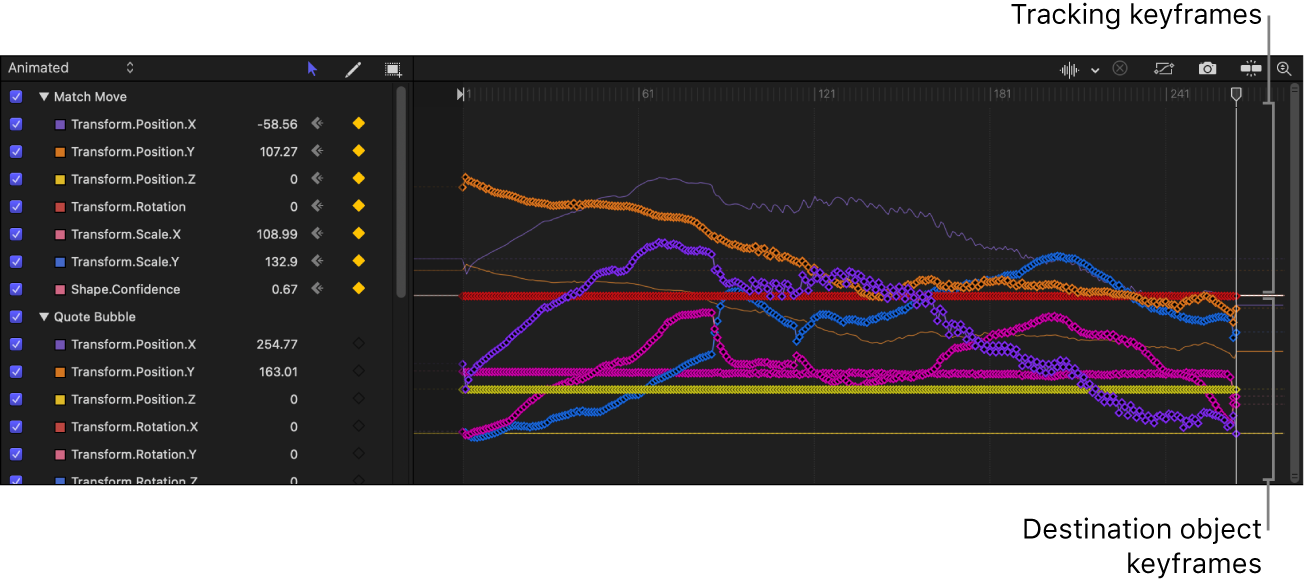

Tracking keyframes and the transformed object’s keyframes appear in the Keyframe Editor. If the Keyframe Editor is not visible, click the Show/Hide Keyframe Editor button in the lower-left corner of the Motion window.

A confidence curve is also displayed in the Keyframe Editor. This curve provides a visual indication of the tracker’s accuracy relative to its parameter settings in the Inspector. The confidence curve is for reference only and is not used for editing purposes.

The movement of the destination object is matched to the movement of the reference pattern analyzed in the clip, but you can still reposition the object in the canvas. To apply scale changes in the source object to the destination object, see the next task, Adjust the scale of a match-moved object.
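One way to see why repositioning a match-moved object doesn't break the match: the tracked movement can be thought of as a per-frame offset added to the object's own position. The minimal Python sketch below shows that idea. Measuring the offset relative to the first tracked frame, and the name `matched_path`, are assumptions for illustration; this is not a description of Motion's internal computation.

```python
# Hypothetical sketch: tracked motion applied as an offset on top of the
# destination's own position. The reference frame choice is an assumption.
from typing import List, Tuple

Point = Tuple[float, float]


def matched_path(track: List[Point], own_position: Point) -> List[Point]:
    """Return the destination position for every tracked frame."""
    ref_x, ref_y = track[0]  # assumed reference: the first tracked frame
    ox, oy = own_position
    # Each frame adds the tracker's displacement since the reference frame.
    return [(ox + x - ref_x, oy + y - ref_y) for x, y in track]
```

Dragging the object in the canvas changes only `own_position`; the shape of the path, which comes from the track, stays the same.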

Adjust the scale of a match-moved object

When you match move a destination object to a source clip using Object mode tracking, changes in the size of the source object are not automatically applied to the destination object. You can use the Scale Mode pop-up menu to apply the source object’s changes in scale to the destination object.

In Motion, complete steps 1–5 in the previous task, Match move a destination object to a source object in a clip.

In the Adjust parameter row of the Behaviors Inspector, select Scale.

Do one of the following:

Uniformly scale the destination object to the minimum dimension of the tracker region: Click the Scale Mode pop-up menu, then choose Scale All (Fit). If the destination object contains changes in scale, this setting scales the maximum size of the object to the minimum dimension of the tracker region.

Uniformly scale the destination object to the maximum dimension of the tracker region: Click the Scale Mode pop-up menu, then choose Scale All (Fill). If the destination object contains changes in scale, this setting scales the minimum size of the object to the maximum dimension of the tracker region.

Nonuniformly scale the destination object based on the size of the tracker region: Click the Scale Mode pop-up menu, then choose Scale X & Y. If the source object contains changes in scale, this setting may cause the destination object to be stretched or squashed.
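The Scale Mode options above reduce to simple ratios. This Python sketch follows their literal wording for a single frame, with sizes given as (width, height). The function name is hypothetical, and Motion may compute the factors differently (for example, across the whole animation rather than per frame).

```python
# Illustrative arithmetic for the three Scale Mode options, following the
# wording of the descriptions above. Names are hypothetical.
from typing import Tuple

Size = Tuple[float, float]  # (width, height)


def scale_factors(obj: Size, region: Size, mode: str) -> Tuple[float, float]:
    """Return (x_scale, y_scale) to apply to the destination object."""
    ow, oh = obj
    rw, rh = region
    if mode == "Scale All (Fit)":
        # Largest object dimension -> smallest tracker-region dimension.
        s = min(rw, rh) / max(ow, oh)
        return (s, s)
    if mode == "Scale All (Fill)":
        # Smallest object dimension -> largest tracker-region dimension.
        s = max(rw, rh) / min(ow, oh)
        return (s, s)
    if mode == "Scale X & Y":
        # Each axis independently, which can stretch or squash the object.
        return (rw / ow, rh / oh)
    raise ValueError(f"unknown scale mode: {mode}")
```

For a 200 × 100 object and a 100 × 80 tracker region, Fit gives a uniform factor of 0.4, Fill gives 1.0, and Scale X & Y gives 0.5 horizontally and 0.8 vertically, which is the stretching the last option warns about.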

Match move a destination object to points or patterns in a source clip

In this typical workflow, the Match Move behavior tracks points or patterns in the source clip, then applies the resulting track data to a destination object. As a result, the destination object matches the movement of the tracked element in the source clip.

Import a source video clip into your Motion project, then import a destination object (such as an image or clip), or add a destination object (such as a shape, particle emitter, or text).

The destination layer must be above the video clip layer in the Layers list.

In the Layers list, select the destination object, click Behaviors in the toolbar, then choose Motion Tracking > Match Move.

Important: If you’re applying the Match Move behavior to a group, make sure the footage being analyzed resides outside of that group.

By default, an object tracker conforming to the destination object is added to the center of the canvas.

In the Behaviors Inspector, click the Mode pop-up menu, then choose Point.

In the canvas, the object tracker changes to a point tracker. Because the default Match Move tracker records position data, it’s known as an anchor tracker.

Optional: To track more complex motion (such as a rotating element or an element with four corners), do one of the following:

Add a second point tracker to track additional rotation and scale data: In the Behaviors Inspector, select the Rotation-Scale checkbox (under the Anchor checkbox), then drag the new point tracker into position in the canvas. See Two-point tracking in Motion.

Add four point trackers to track an element with four corners: In the Behaviors Inspector, click the Type pop-up menu, choose Four Corners, then proceed to step 3 of Use four-corner tracking to pin a destination object to a source object.

To remove a point tracker, click Remove in the track row in the Behaviors Inspector.
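Two-point tracking relies on standard geometry: with an anchor point and a second (rotation-scale) point, the change in the angle of the line between them gives rotation, and the change in its length gives scale. The Python sketch below shows that calculation between two frames. The function and parameter names are hypothetical, and angles use the mathematical convention (counterclockwise positive, y pointing up), which may not match Motion's sign convention.

```python
# Sketch of the geometry behind two-point tracking. Names are hypothetical;
# angles are counterclockwise-positive with y pointing up (an assumption).
import math
from typing import Tuple

Point = Tuple[float, float]


def rotation_and_scale(anchor0: Point, second0: Point,
                       anchor1: Point, second1: Point) -> Tuple[float, float]:
    """Return (rotation in degrees, scale factor) from frame 0 to frame 1."""
    dx0, dy0 = second0[0] - anchor0[0], second0[1] - anchor0[1]
    dx1, dy1 = second1[0] - anchor1[0], second1[1] - anchor1[1]
    angle = math.degrees(math.atan2(dy1, dx1) - math.atan2(dy0, dx0))
    # Wrap into [-180, 180) so a small turn isn't reported as nearly 360.
    angle = (angle + 180.0) % 360.0 - 180.0
    # Ratio of the distances between the two points gives the scale.
    scale = math.hypot(dx1, dy1) / math.hypot(dx0, dy0)
    return angle, scale
```

If the second point starts 10 pixels to the right of the anchor and ends 20 pixels directly above it, the result is a 90-degree rotation and a scale factor of 2.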

Move the playhead to the frame where you want the track analysis to begin.

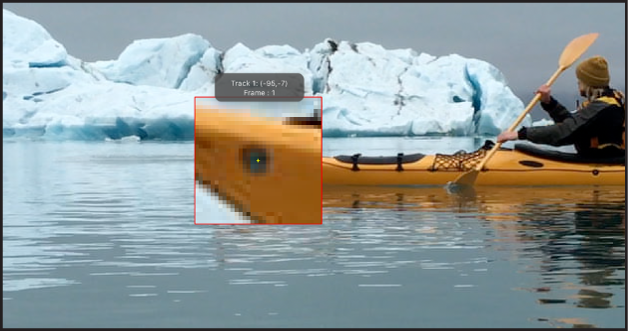

In the canvas, drag the tracker (or trackers) to the area (or areas) you want to track.

As you drag the tracker in the canvas, the region around the tracker becomes magnified to help you find a suitable reference pattern.

Note: For more information about adjusting the point tracker, see Adjust onscreen trackers in Motion.

In the Behaviors Inspector (or HUD), do one of the following:

Analyze the clip forward from the position of the playhead: Click Analyze.

Analyze the portion of the clip before the playhead position: Click the left arrows next to the Analyze button.

Analyze the portion of the clip after the playhead position: Click the right arrows next to the Analyze button.

The behavior analyzes the movement of the reference pattern in the video clip, then matches the movement of the destination object to it.

To stop the tracking analysis, click the Stop button in the progress window or press Esc.

The points in the canvas correspond to the tracking keyframes that appear in the Keyframe Editor. If the Keyframe Editor is not visible, click the Show/Hide Keyframe Editor button in the lower-left corner of the Motion window.

A confidence curve is also displayed in the Keyframe Editor. This curve provides a visual indication of the tracker’s accuracy relative to its parameter settings in the Inspector. The confidence curve is for reference only and is not used for editing purposes.

Match move using animation data from keyframes or behaviors

Because objects animated with keyframes or with behaviors already contain motion data, you can apply that data to a destination object using the Match Move behavior without performing a tracking analysis.

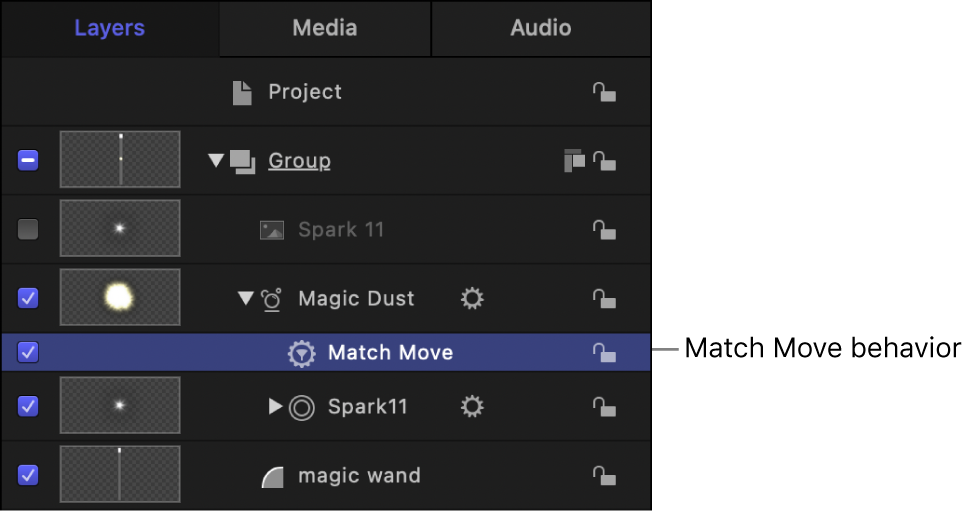

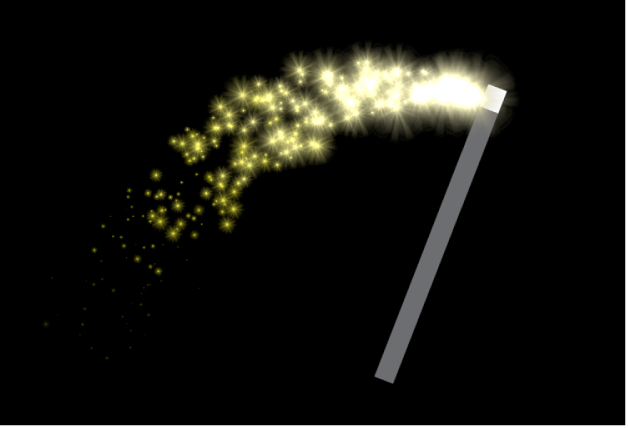

The following simple example uses two objects: a “magic wand” image (a rectangular shape) animated with the Spin behavior, and the Magic Dust particle emitter (available in the Library). The Match Move behavior extracts the animation of the magic wand and applies it to the Magic Dust particle emitter, creating the illusion of sparkles flying off the tip of the spinning wand.

In Motion, make sure the destination object (the particle emitter) is above the source object (the magic wand shape) in the Layers list.

In the Layers list, select the destination object, click Behaviors in the toolbar, then choose Motion Tracking > Match Move.

In the Layers list, the Match Move behavior appears directly under the particle emitter.

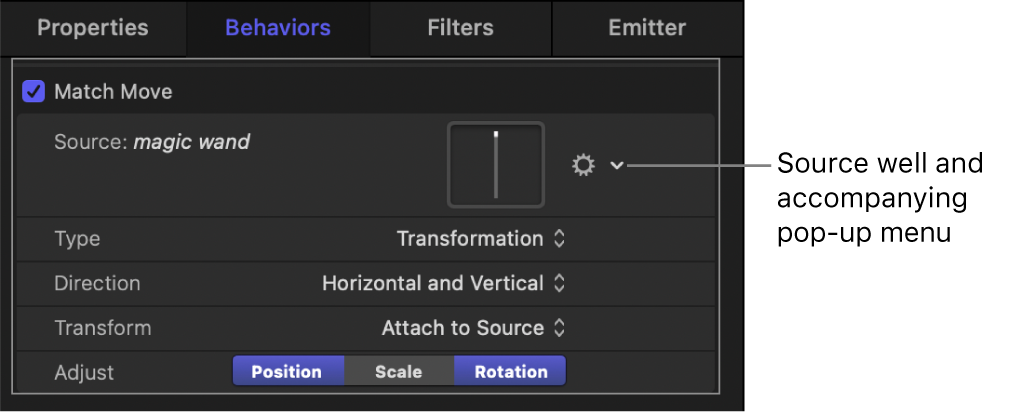

Because the animated source object (the spinning magic wand) is below the Match Move behavior in the Layers list, the source animation data is automatically loaded into the Source well in the Behaviors Inspector. If you don’t see the animated object in the Source well, drag the animated object from the Layers list into the Source well.

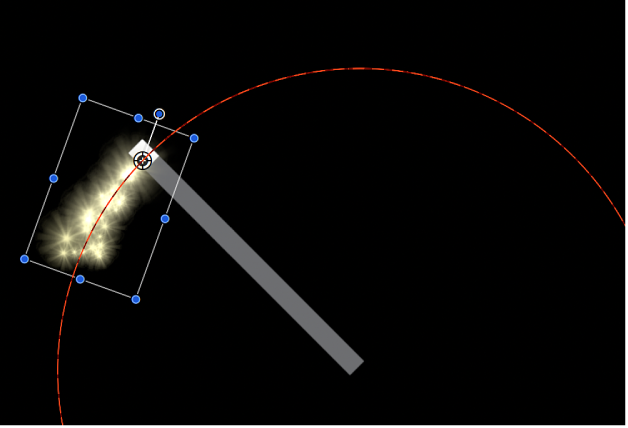

In the canvas, drag the destination object (the particle emitter) to the tip of the magic wand.

The motion path inherited from the source object appears in the canvas, attached to the destination object.

As a result, the particle emitter and the wand now share the same animation path.
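One way to picture why the emitter stays on the tip of a spinning wand: the emitter keeps its offset from the wand’s pivot, and that offset is rotated by the wand’s animated angle on every frame. The Python sketch below shows that geometry. The pivot, angles, offset, and function name are invented for illustration, and this is an assumption about the visible effect, not a description of Motion’s implementation.

```python
# Hypothetical sketch: a point attached to a spinning source follows the
# source's rotation about its pivot. All values and names are illustrative.
import math
from typing import List, Tuple

Point = Tuple[float, float]


def follow_source(pivot: Point, angles_deg: List[float],
                  offset: Point) -> List[Point]:
    """Position of a point attached to a spinning source, per frame."""
    path: List[Point] = []
    ox, oy = offset
    for a in angles_deg:
        t = math.radians(a)
        # Standard 2D rotation of the offset vector, then move to the pivot.
        x = pivot[0] + ox * math.cos(t) - oy * math.sin(t)
        y = pivot[1] + ox * math.sin(t) + oy * math.cos(t)
        path.append((x, y))
    return path
```

With the pivot at the origin and the emitter 100 pixels along the wand, angles of 0, 90, and 180 degrees place the emitter at approximately (100, 0), (0, 100), and (-100, 0).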

Play the project (press the Space bar).

The particles match the movement of the wand.

After you match move an object, you can still adjust it: resize, rotate, or move it, apply filters to it, and so on. Tracking data is kept separate from the object’s own properties and transform parameters.

For a full description of Match Move parameters, see Match Move controls in Motion.
