PostgreSQL is one of the most powerful database systems in the world. I have always been passionate about its great power, especially its modern SQL language features.

However, that doesn’t mean everything is great. There are areas where it hurts. Novice users unaware of the problem might get into trouble, and I see such things happen very often. This is a note for those who design their solutions around PostgreSQL using temporary tables.

Problem one: Bloated catalog and poor performance

PostgreSQL users often contact us about poor responsiveness from the database server. Not just one SQL running slow, but everything appears to be slow in the database. Generally, the investigation starts from the ground up, starting from the host machine. I use pg_gather to scan and quickly identify all problems at the PostgreSQL layer. A good percentage of such investigations end up placing a bloated catalog.

Generally, I expect all the catalog information of a typical PostgreSQL instance to be around 20 MB. The size depends on the number of objects in the database. Databases with many objects (tables, indexes, etc.), such as large numbers of partitioned tables with many partitions, are expected to have a bigger catalog. But if the size is way too big than expected, it should ring alarms.

Here are some examples of findings from pg_gather from real production environments:

Another one:

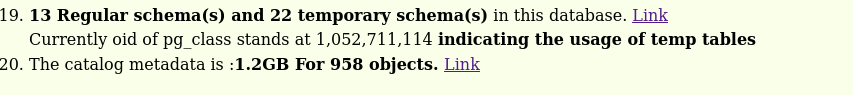

Yet another one with a 1.2GB catalog:

The worst I ever saw was a 40GB! Catalog.

Problem two: High autovacuum activity on catalog tables

In some cases, when we track the autovacuum activities using PostgreSQL logs (using log_autovacuum_min_duration), we may see too frequent autovacuum runs in those catalog tables

1 2 3 4 5 6 7 8 9 10 11 | 2024-12-26 11:48:50.795 UTC [1980] LOG: automatic vacuum of table "postgres.pg_catalog.pg_attribute": index scans: 1 pages: 0 removed, 15015 remain, 14977 scanned (99.75% of total) tuples: 344581 removed, 3664 remain, 569 are dead but not yet removable removable cutoff: 449706, which was 16999 XIDs old when operation ended new relfrozenxid: 449706, which is 64253 XIDs ahead of previous value frozen: 0 pages from table (0.00% of total) had 0 tuples frozen index scan needed: 14960 pages from table (99.63% of total) had 1286231 dead item identifiers removed index "pg_attribute_relid_attnam_index": pages: 8946 in total, 4780 newly deleted, 8342 currently deleted, 8342 reusable index "pg_attribute_relid_attnum_index": pages: 6768 in total, 3531 newly deleted, 6193 currently deleted, 6193 reusable avg read rate: 29.354 MB/s, avg write rate: 25.649 MB/s … |

A closer look at PostgreSQL reveals the catalog tables affected by temporary tables. Mainly, they are:

1 2 3 4 5 6 | pg_catalog.pg_attribute pg_catalog.pg_class pg_catalog.pg_constraint pg_catalog.pg_depend pg_catalog.pg_index pg_catalog.pg_type |

Occasionally, tables like pg_catalog.pg_statistic also come up for cleanup. There are cases where the autovacuum ran several hundreds of times every hour.

If someone is using temporary tables extensively, that will create sufficient work for autovacuum workers. Not to mention the volume of IO all these are causing.

Problem three: High DDL activity and application design

Architects are often surprised when they hear that each application session needs to issue a DDL “CREATE TEMPORARY TABLE …” as the first step before the application can use the temporary table. It is hard to convince someone that hundreds of sessions need to be running DDLs parallelly through multiple sessions. This not only causes the modification/redesign of the application logic but also causes notable performance degradation.

Problem four: Ineffective connection pooling

The temporary tables in PostgreSQL have life within a specific connection/session. That means that good connection pooling is not possible. Each session has to create its temporary tables. There is no assurance that the application will get the same connection where the temporary table was created once the connection is released to the pool. External connection pooling solutions like pgBouncer clearly document this limitation: https://www.pgbouncer.org/features.html

Application logic has no other option than using “session mode” pooling. However, practically, I see most applications hold the connection they created until the application shuts down. So, effectively, session mode pooling is very close to no connection pooling.

Problem five: Flooding the PostgreSQL logs and audit logs

Yet another side effect of using temporary tables is that it might flood the PostgreSQL log with DDLs. Most production systems will have the parameter setting log_statement=’ddl’ or other means of DDL auditing. This will cause all the temporary table creations to be logged into PostgreSQL logs. The end result is substantial log files that are difficult to analyze.

Problem six: Helpless autovacuum

The temporary table stores the xid like regular tables and gets bloated. But autovacuum (or manual vacuum) cannot do anything on the temporary table.

Generally, we love to see long-lived connections to databases (instead of frequent connections and disconnections). But such long-lived connections could hold some temporary table with an old xid reference. The worst thing could be the system approaching Transaction Wraparound conditions, triggering alerts, and getting called late at night.

Why is this happening?

One of the common questions I hear is, “We have been using temporary tables in XYZ SQL database, and there was no problem. Why is this happening in PostgreSQL?”

The difference is in the design choice and implementation. Temporary tables in PostgreSQL allow every session to create a temporary table with the same name but possibly with a different structure. This is a significant flexibility, but it comes with its cost. This means that every session needs to have its own temporary schema to hold the temporary table. So, all temporary tables will be created in a separate schema mapped to each session. This is the source of additional metadata generation. Then PostgreSQL will insert the temporary table information into all catalog tables (pg_class, pg_attribute, etc) just like a regular table. So, obviously, these are DDL operations, and there will be more DML operations on those catalog tables.

Temporary tables are automatically dropped at the end of a session or, optionally, at the end of the current transaction, causing dead tuples in the catalog tables. Moreover, this design forces the users to create temporary tables every time, repeatedly in each session, causing a lot of DDL activity in the system.

Other problems/limitations of temporary tables

As per standards, GLOBAL or LOCAL can be specified while creating the TEMPORARY table. But PostgreSQL does not distinguish between them; it just ignores them.

The PostgreSQL community is well aware of all the problems, which are well documented.

Reference: https://www.postgresql.org/docs/current/sql-createtable.html#SQL-CREATETABLE-COMPATIBILITY

It continues:

“The SQL standard also distinguishes between global and local temporary tables, where a local temporary table has a separate set of contents for each SQL module within each session, though its definition is still shared across sessions. ”

So, PostgreSQL allows user sessions to have temporary tables with the same name but different table structures. Practically, I have yet to see a case where such flexibility is very useful. So, effectively, PostgreSQL has deviated from standards and destroyed the real usability of temporary tables for something less useful.

Summary

Catalog information is frequently used by PostgreSQL for every stage of SQL processing, Starting from the identification of tables and columns, permissions, statistics, etc. A lean and trim catalog is essential for performance; on the other hand, a bloated catalog is terrible for performance.

Using temporary tables is the most frequent cause of bloated catalogs, causing serious performance degradation. If some app design has to use temporary tables, there is not much option than flooding the system with repeated DDLs.

Not every catalog bloat case is caused by temporary tables. Consolidating many schemas into a single database may be another common reason for a big catalog and poor performance. Here is an example of such a consolidation environment.

PostgreSQL is free and open, so there is not much benefit in consolidating everything into a single database (unlike proprietary software). Consolidation comes with many challenges and costs.

In my observation, PostgreSQL’s current temporary table implementation is not of great value or sustainable for heavy production use. It has many practical difficulties. I recommend avoiding it as much as possible in your design/architecture and looking for workarounds.

If the catalog is already bloated and performance suffers, running VACUUM FULL on the catalog tables is the solution. Taking a momentary lock and running the VACUUM FULL on catalog tables is the most practical method to eliminate the bloat. There are users who switched to UNLOGGED tables with a custom implementation of the temporary table. There is an extension exits (pgtt) that implements Oracle-like Global temporary tables using UNLOGGED tables. There have been multiple efforts in the PostgreSQL community to implement a better Temporary Table, including this commitfest item: https://commitfest.postgresql.org/26/2349/

I hope PostgreSQL will get that soon, as it will make life easier for all Architects/Designers, Developers, and DBAs.

Our PostgreSQL Performance Tuning eBook condenses years of database expertise into a practical guide for optimizing your PostgreSQL databases. Inside, you’ll discover our most effective PostgreSQL performance strategies derived from real-world experience.

This is golden. Little gold nuggets of knowledge. Thank you

Thank you, Honk, for this feedback