Abstract

Rapid technological advances have led to the production of different types of biological data and enabled construction of complex networks with various types of interactions between diverse biological entities. Standard network data analysis methods were shown to be limited in dealing with such heterogeneous networked data and consequently, new methods for integrative data analyses have been proposed. The integrative methods can collectively mine multiple types of biological data and produce more holistic, systems-level biological insights. We survey recent methods for collective mining (integration) of various types of networked biological data. We compare different state-of-the-art methods for data integration and highlight their advantages and disadvantages in addressing important biological problems. We identify the important computational challenges of these methods and provide a general guideline for which methods are suited for specific biological problems, or specific data types. Moreover, we propose that recent non-negative matrix factorization-based approaches may become the integration methodology of choice, as they are well suited and accurate in dealing with heterogeneous data and have many opportunities for further development.

Keywords: data fusion, biological networks, non-negative matrix factorization, systems biology, omics data, heterogeneous data integration

1. Introduction

One of the most studied complex systems is the cell. However, its functioning is still largely unknown. It comprises diverse molecular structures, forming complex, dynamical molecular machinery, which can be naturally represented as a system of various types of interconnected molecular and functional networks (see figure 1 for an illustration). Recent technological advances in high-throughput biology have generated vast amounts of disparate biological data describing different aspects of cellular functioning also known as omics layers.

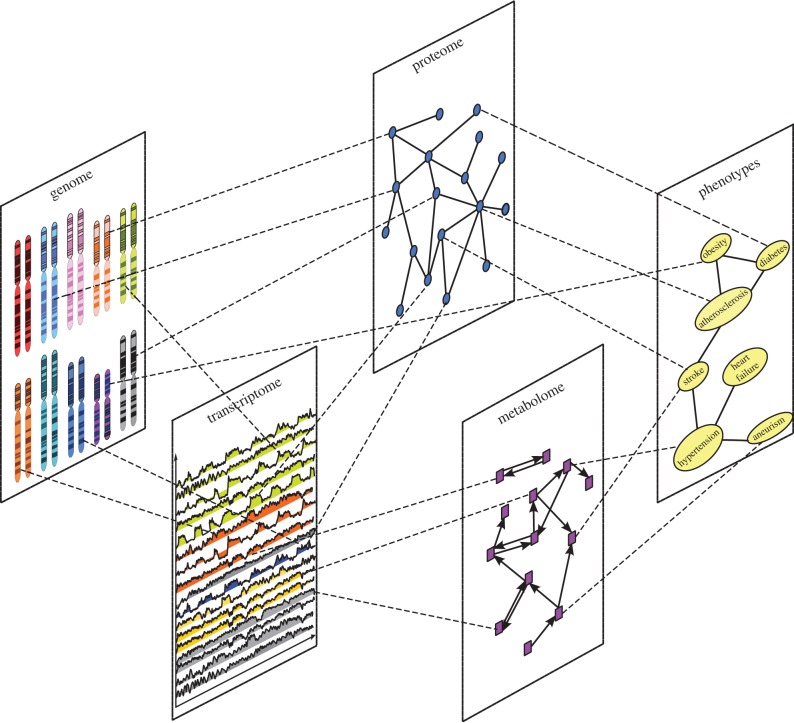

Figure 1.

A schematic illustration of the molecular information layers of a cell.

For example, yeast two-hybrid assays [1–7] and affinity purification with mass spectrometry [8,9] are the most widely used high-throughput methods for identifying physical interactions (bonds) among proteins. These interactions, along with the whole set of proteins, comprise the proteome layer. Other experimental technologies, such as next-generation sequencing [10–13], microarrays [14,15] and RNA-sequencing technologies [16–18], have enabled construction and analyses of other omics layers. Figure 1 illustrates these layers and their constituents: genes in the genome, mRNA in the transcriptome, proteins in the proteome, metabolites in the metabolome and phenotypes in the phenome. It illustrates that the mechanisms by which genes (in the genome layer) lead to complex phenotypes (in the phenome layer) depend on all intermediate layers and their mutual relationships (e.g. protein–DNA interactions).

It has largely been accepted that a comprehensive understanding of a biological system1 can come only from a joint analysis of all omics layers [19,20]. Such analysis is often referred as data (or network) integration. Data integration collectively analyses all datasets and builds a joint model that captures all datasets concurrently. A starting point of this analysis is to use a mathematical concept of networks to represents omics layers. A network (or a graph) consists of nodes (or vertices) and links (or edges). In biological networks, nodes usually represent discrete biological entities at a molecular (e.g. genes, proteins, metabolites, drugs, etc.) or phenotypic level (e.g. diseases), whereas edges represent physical, functional or chemical relationships between pairs of entities [21]. For the last couple of decades, networks have been one of the most widely used mathematical tools for modelling and analysing omics data [22]. In particular, these tools applied to studies of protein–protein interaction (PPI) networks [23,24], gene interaction (GI) networks [25–27], metabolic interaction (MI) networks [28–31] and gene co-expression (Co-Ex) networks [32,33] have extracted valuable biological information from these different views of molecular machinery inside a cell. However, a more complete understanding of a biological system is expected to be achieved by a joint, integrative analysis of all these networks. Constructing an integrated network representation that best captures all gene–gene associations by using all molecular networks has been one of the major challenges in network integration2 [34,35].

1.1. The need for data integration

Abundance of biological data has made data integration approaches increasingly popular over the past decade. Understanding cellular process and molecular interactions by integrating molecular networks has just been one of the challenges of data integration. In addition to molecular network data, accumulation of other data types has created a need for development of data integration methods that can address a wide range of biological problems and challenges. Some examples of these data include protein and genome sequence data [36], disease data from genome-wide association studies (GWAS) [12,13,37,38], mutation data from The Cancer Genome Atlas (TCGA) [39], copy number variation (CNV) data [40], functional annotation and ontology data, such as gene ontology (GO) [41] and disease ontology (DO) [42], protein structure data [43], drug chemical structure and drug–target interaction (DTI) data [44–46]. These data represent a valuable complement to the molecular networks and they are often incorporated into various data integration frameworks to increase reliability of newly discovered knowledge.

Because the literature on these topics is vast, we chose to mainly focus on the following currently foremost data integration problems:

— Network inference and functional linkage network (FLN) construction. Network inference is one of the major problems in systems biology aiming to understand GIs and their mutual influence [47]. It aims to construct network topology (or wiring between genes) based on the evidence from different data types. The special focus in the literature has been on the inference of gene regulatory networks (GRNs), whose nodes are genes and edges are regulatory interactions [48]. Standard methods for GRN inference have mostly been based on gene expression data. However, integration of expression data with other data types has been shown to improve the GRN inference process [49]. Unlike GRN, FLN captures all possible gene associations, constructed from multiple data types. FLN has demonstrated its potential in studying human diseases and predicting novel gene functions and interactions [35,50].

— Protein function and PPI prediction. Protein function (also known as protein annotation) prediction has been demonstrated to be a good alternative to the time-consuming experimental protein function characterization. It aims to computationally assign molecular functions to unannotated proteins. The accuracy of these methods has largely improved with the use of integration methods that can incorporate multiple different biological data conveying complementary information about protein functions [51–55]. Also, interactions between proteins are important for understanding intracellular signalling pathways and other cellular process. However, PPI network structure for many species is still largely unknown and therefore, many computational techniques for PPI interaction prediction have been proposed. Much attention has been paid to the data integration methods capable of inferring new PPIs by integrating various types of heterogeneous biological data [50,56,57].

— Disease gene prioritization and disease–disease association prediction. Prioritization of disease-causing genes is a problem of great importance in medicine. It deals with the identification of genes involved in a specific disease and providing a better understanding of gene aberrations and their roles in the formation of diseases [58]. However, a majority of diseases are characterized with a small number of known associated genes and experimental methods for discovering new disease-causing genes are expensive and time-consuming [59]. Therefore, computational methods for prioritization of disease genes have been proposed. Among the most popular methods are data integration methods owing to their ability to improve the reliability of prioritization by using multiple biological data sources [35,60]. Also, predicting associations between diseases is of great importance. Current disease associations are mainly derived from similarities between pathological profiles and clinical symptoms. However, the same disease could lead to different phenotype manifestations and thus, to inaccurate associations with other diseases. Integration of molecular data has been shown to lead to better and more accurate disease–disease associations [61].

— Drug repurposing. It aims to find new uses for existing drugs and thereby drastically reduce the cost and time for new drug discovery [62]. Accumulation of various biological data involving interactions between drugs, diseases and genes, and protein structural and functional similarities, provide us with new opportunities for data integration methods to generate new associations between diseases and existing drugs [63].

— Patient-specific data integration. It attempts to integrate patient-specific clinical (e.g. patient's history, laboratory analysis, etc.), genetic (e.g. somatic mutations) and genomic data (e.g. gene expression data from healthy and diseased tissues). Such approaches are contributing to the development of the nascent field of precision medicine, which aims to understand an individual patient's disease on the molecular level and hence, propose more precise therapies [64]. Data integration methods have started contributing to this growing field [65]. Here, we present data integration methods capable of jointly analysing clinical, patient- and disease-specific data to classify patients into groups with different clinical outcomes and prognoses, hence data integration methods contribute to improving therapies.

1.2. Computational challenges of data integration

The main goal of any data integration methodology is to extract additional biological knowledge from multiple datasets that cannot be gained from any single dataset alone. To reach this goal, data integration methodologies have to meet many computational challenges. These challenges arise owing to different sizes, formats and dimensionalities of the data being integrated, as well as owing to their complexity, noisiness, information content and mutual concordance (i.e. the level of agreement between datasets).

A number of current data integration methods meet some of these challenges to some extent, whereas the majority of them hardly meet any of them. A reason is that many data integration approaches are based on the methods designed for analysing one data type, and they are further adopted to deal with multiple data types. Thus, these methods often suffer from various limitations when applied to multiple data types. For example, in terms of network integration, standard methods for network analysis fail to simultaneously take into account connectivity structure (topology) of multiple different networks along with capturing the biological knowledge contained in them. They are based on different types of transformation methods to project, or merge multiple networks into a single, integrated network on which further analysis is performed [34,66–68]. Their limitations will be explained later in this article. However, more sophisticated network-based (NB) methods use either random walk or diffusion processes [69–73] to simultaneously explore connectivity structures (topologies) of multiple different networks and to infer integrated biological knowledge from all networks concurrently.

However, a majority of data integration studies are based on methods from machine learning (ML) owing to their ability to integrate diverse biological networks along with other biological data types. Namely, the basic strategy has been to use standard ML methods and extend them to incorporate disparate data types.

In this article, we provide a review of the methodologies for biological data integration and highlight their applications in various areas of biology and medicine. We compare these methods in terms of the following computational challenges:

— different size, format and dimensionality of datasets,

— presence of noise and data collection biases in datasets,

— effective selection of informative datasets,

— effective incorporation of concordant and discordant datasets, and

— scalability with the number and size of datasets.

We identify these computational challenges to be the most important, as every data integration methodology aims to address them at least to some extent.

1.3. Focus of this article

There are a number of review articles that cover related topics from different perspectives, or with a special focus on a particular biological problem. For example, Rider et al. [74] focus on methods for network inference with a special focus on probabilistic methods. The authors also argue about the need for standardized methods and datasets for proper evaluation of integrative methods. Kim et al. [75] focus on methods for construction and reconstruction of biological networks from multiple omics data, as well as on their statistical analysis and visualization tools. Bebek et al. [76] cover integrative approaches for identification of biomarkers and their implications in clinical science. They mostly focus on methods from network biology. Hamid et al. [77] proposе a conceptual framework for genomic and genetic data integration and review integration methods with a focus on statistical aspects. Kristensen et al. [78] review integrative methods applied in cancer research. They provide a comprehensive list of current tools and methods for genomic analyses in cancer.

In this paper, we focus on NB and to a large extent on ML data integration methods that have a wide range of applications in systems biology and a wide spectrum of data types that they can integrate. In particular, we focus on the state-of-the-art kernel-based (KB) methods [79], Bayesian networks (BNs) [80] and non-negative matrix factorization (NMF) [81] methods owing to their prominent applications in systems biology, as they can integrate large sets of diverse, heterogeneous biological data [82].

Note that there are many other data integration approaches that do not fall into the biological or methodological categories which we focus on in this review paper. Some of them include integration of multiple omics data for analysis of condition-specific pathways [83], integrative approaches for detecting modular structures in networks [84,85], integrated statistical analysis of multiple datasets [86], etc. Nevertheless, the methods and biological problems reviewed here cover a wide spectrum of foremost topics in systems biology. We also provide guidance on choosing suitable methods for integrating particular data. This is important as thus far there is no consensus (or guidelines) on what integration method should be used for a biological problem at study. Many of the existing review papers fail to provide answers to these questions. Here, we highlight the advantages and disadvantages of the most widely used data integration methods and provide an insight into which method should be used for which type of biological problem and applied on which type of homogeneous or heterogeneous data (see table 2 and §3 for more details). Some of these methods are also given as tools and can be useful to domain scientists. Please note that many of the existing reviews focus only on data integration methodologies in a specific biological domain, or on specific type of data. Thus, our review is comprehensive, as it covers a wide range of methodologies for data integration, as well as network and data types commonly used in data integration studies.

Table 2.

Summary of methods for data integration. See §§2 and 3 for abbreviations.

| method name | biological problem | data (network) types | approach | integration type | integration strategy | reference |

|---|---|---|---|---|---|---|

| NeXO | GO inference | PPI, GI, Co-Ex and YeastNet | NB | homogeneous | early | Dutkowski et al. [66] |

| GeneMANIA | gene function prediction | — | NB | homogeneous | early | Mostafavi & Morris [124] |

| MRLP | disease association prediction | DSN, GDA | NB | heterogeneous | early | Davis & Chawla [68] |

| PRINCE | disease–gene prioritization | PPI, DSN, GDA | NB | heterogeneous | intermediate | Vanunu et al. [72] |

| NBI | drug–target prediction | DCS, PSeqS, DTI | NB | heterogeneous | intermediate | Cheng et al. [70] |

| — | drug–disease association inference | DSN, DCS, Co-Ex | NB | heterogeneous | intermediate | Huang et al. [73] |

| — | gene-regulatory network inference | eQTL, GExD, TFBS and PPI | BN | heterogeneous | intermediate | Zhu et al. [125], Zhang et al. [126] |

| MAGIC | gene function prediction | PPI, GI, GExD and TFBS | BN | heterogeneous | intermediate | Troyanskaya et al. [54] |

| — | PPI prediction and FLN construction | PPI, Co-Ex, GO | BN | heterogeneous | late | Jansen et al. [127] |

| — | FLN construction | — | BN | heterogeneous | late | Linghu et al. [35], Lee et al. [50] |

| — | cancer prognosis prediction | clinical, GExD | BN | heterogeneous | intermediate | Gevaert et al. [128], van Vliet et al. [129] |

| PSDF | cancer prognosis prediction | GExD, CNV | BN | homogeneous | intermediate | Yuan et al. [130] |

| — | protein function prediction/classification | PSeqS, Co-Ex, PPI | KB | homogeneous | intermediate | Lanckriet et al. [51,52] |

| — | drug repurposing | DCS, DTI, PPI and GExD | KB | heterogeneous | intermediate | Napolitano et al. [63] |

| PreDR | drug repurposing | DCS, DTI, PPI and DSES | KB | heterogeneous | intermediate | Wang et al. [131] |

| — | network inference | GeXD, PPI, GO and PhylProf | KB | homogeneous | intermediate | Kato et al. [132] |

| KCCA | network inference | PPI, GexD, GO and PhylProf | KB | homogeneous | early | Yamanishi et al. [133] |

| — | cancer prognosis prediction | clinical, GExD | KB | heterogeneous | early | Daemen et al. [134] |

| DFMF | disease association prediction | PPI, GI, Co-Ex, CS, MI, DTI, GO, GA, DO, DSES and GDA | NMF | heterogeneous | intermediate | Žitnik et al. [61], Žitnik & Župan [135] |

| — | GO inference and gene function prediction | PPI, GI, Co-Ex, YeastNet, GO and GA | NMF | heterogeneous | intermediate | Gligorijević et al. [55] |

| — | PPI prediction | PStrS, GexF, PSeqS | NMF | homogeneous | intermediate | Wang et al. [57] |

| R-NMTF | GDA prediction | PPI, DSN, GDA | NMF | heterogeneous | intermediate | Hwang et al. [60] |

Furthermore, we identify deficiencies of particular methods when applied to multiple data types and point out possible mathematical and algorithmic improvements that can be undertaken to address the challenges listed in §1.2. Unlike other reviews, we also provide basic theoretical concepts of these methods that can familiarize the domain scientist with the basic computational concepts that the methods are based on and also serve as a starting point for possible methodological improvements. Moreover, to the best of our knowledge, this review is the first to present very recently proposed NMF methods for biological data integration and compare them with other ML data integration methods. We demonstrate many advantages of these methods over existing ML methods and propose their further improvement.

The paper is organized as follows. In §2, we introduce basic graph theoretic concepts for representing the data and publicly available data repositories. In §3, we survey the methods for data integration, with a detailed focus on NB, KB, BN and NMF methods. We first provide a brief introduction of these methods, followed by their extensions for data integration. We also highlight their advantages and disadvantages and provide directions for their further improvement. A discussion on future research directions is given in §4.

2. Biological data and network representation

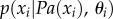

Biological networks have revolutionized our view of biological systems and disease, as they enabled studies from a systems-level perspective. A network (or a graph), usually denoted as G = (V, E), consists of a set of nodes, V, and set of edges, E [87]. Depending on the type of data they represent, network edges can be directed or undirected, weighted or unweighted [88]. For example, an edge in a PPI network is undirected, as it represents a physical bond between two proteins, whereas an edge in a metabolic network is directed, as it represents a chemical reaction that converts one metabolite into another. Networks can also be used to model relations between different types of biological entities, such as genes and diseases. Such relations are usually represented by using bipartite networks. Namely, a bipartite (or in a more general case, a k-partite) network consists of two (or k) disjoint sets of nodes (partitions) and a set of edges connecting nodes between different partitions. For example, gene–disease associations (GDAs) are represented by a bipartite network. Combinations of general and bipartite network representations are usually used to link multiple types of networks into a single, complex, heterogeneous, multi-relational network. For example, in many network integration studies, a gene–gene association network and a disease–disease association network are linked by a GDA bipartite network, jointly forming a complex, heterogeneous, multi-relational network (see figure 2a for an illustration) [68].

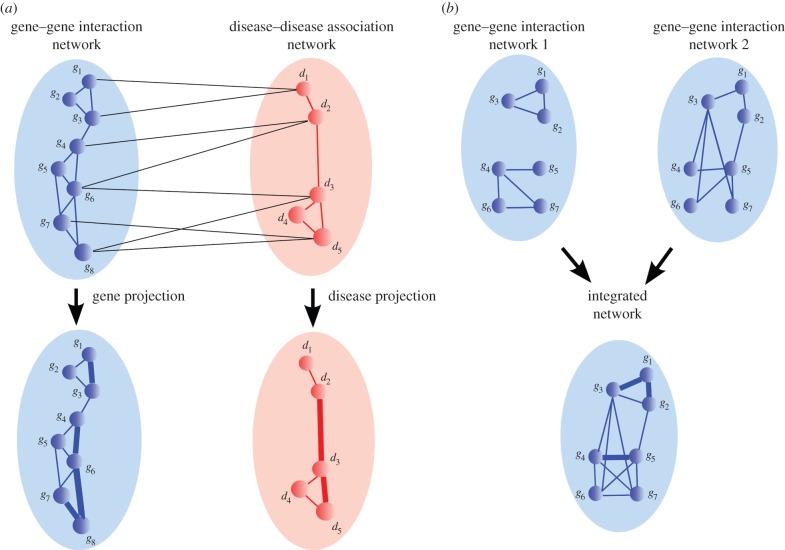

Figure 2.

(a) An illustration of a heterogeneous network composed of a gene–gene interaction network (blue), a disease–disease association network (red) and a gene–disease association network (black edges). A simple integrated network is obtained via either gene, or disease projection method (see details in §3). The thickness of an edge in a projected network illustrates its weight. (b) An illustration of homogeneous gene–gene interaction networks. An integrated network is constructed by using a simple data merging method (see text in §3 for details).

A network connectivity pattern is often represented by an adjacency matrix [87]: for an undirected network G = (V, E), its adjacency matrix, A, is a square matrix of size  , where each row and column denotes a node and entries in the matrix are either Aij = 1, if nodes i and j are connected, or Aij = 0, otherwise. In the case of a weighted network, instead of binary values, entries in an adjacency matrix are real numbers representing the strengths of associations between the nodes. The Laplacian matrix of G, denoted as L, is another mathematical concept widely used in spectral graph theory [89] and semi-supervised ML problems [90]. It is defined as: L = D – A, where D is the diagonal degree matrix; that is, entries on the diagonal, Dii, are node degrees (the degree is the number of edges connected to a node), whereas off-diagonal entries, Dij, i ≠ j, are zeros.

, where each row and column denotes a node and entries in the matrix are either Aij = 1, if nodes i and j are connected, or Aij = 0, otherwise. In the case of a weighted network, instead of binary values, entries in an adjacency matrix are real numbers representing the strengths of associations between the nodes. The Laplacian matrix of G, denoted as L, is another mathematical concept widely used in spectral graph theory [89] and semi-supervised ML problems [90]. It is defined as: L = D – A, where D is the diagonal degree matrix; that is, entries on the diagonal, Dii, are node degrees (the degree is the number of edges connected to a node), whereas off-diagonal entries, Dij, i ≠ j, are zeros.

Network wiring (also called topology) has intensively been studied over the past couple of decades by using methods from graph theory and statistical physics [88]. The first studies of molecular networks have shown that many molecular networks are characterized by a complex, scale-free structure. Namely, they have a small number of highly connected nodes (hubs) whose removal disconnects the network and a large number of low-degree nodes [91]. Unlike the structure of random networks, such scale-free structures indicate that molecular networks emerge as a result of complex, dynamical processes taking place inside a cell. This property has been exploited in devising null models of these networks [92–94].

Over the past couple of decades, a variety of mathematical tools for extraction of biological knowledge from real-world molecular networks have been proposed. In this article, we do not provide a review of these methods because they are mainly used for single-type network analyses. For a recent review of these methods, we refer the reader to reference [95]. Here, we present a brief description of biological networks commonly used in network and data integration studies and the procedures for their construction. We refer the reader to reference [82] for more details.

Based on the criteria under which the links in the networks are constructed, we divide biological networks into the following three classes (see table 1 for a summary of data types and data repositories):

- Molecular interaction networks. They include the following network data types.

- — A PPI network consists of proteins (nodes) and physical bonds between them (undirected edges). A large number of studies have dealt with detection and analysis of these types of interactions in different species [23,105]. As proteins are coded by genes, a common way to denote nodes in a PPI network is by using gene notations. Such notations are more common, as they allow a universal representation of all molecular networks and enable their comparison and integration.

- — An MI network contains all possible biochemical reactions that convert one metabolite into another, as well as regulatory molecules, such as enzymes, that guide these metabolic reactions [106]. A common way to represent a metabolic network is by representing enzymes as nodes and two enzymes are linked if they catalyse (participate in) the same reaction [107]. Because enzymes are proteins, they can be denoted by a gene notation.

- — A DTI network is a bipartite network representing physical bonds between drug compounds in one partition and target proteins in the other [108]. Many databases containing curated DTIs from the scientific literature have been published.

- Functional association networks. They include the following network data types.

- — A GI network is a network of genes representing the effects of paired mutations onto the phenotype. Two genes are said to exhibit a positive (negative) GI if their concurrent mutations result in a better (worse) phenotype than expected by mutations of each one of the genes independently [21,27]. GIs may not represent physical ‘interactions' between the proteins, but their functional associations.

- — A GDA network is a bipartite network representing associations between diseases in one partition and disease-causing genes in the other partition.

- — Ontology networks (ONs) are valuable biological components in many network integration studies [61] and they are often integrated with other molecular network data. The two commonly used ontologies in network data integration literature are GO, which unifies the knowledge about functioning of genes and gene products [41], and DO, which unifies the knowledge about relationships between diseases [42]. The hierarchical structure of the ontologies is represented as a directed acyclic graph (DAG)—a graph with no cycles, where nodes represent GO terms (or DO terms) and edges represent parent–child semantic relations.

- Functional and structural similarity networks. They include the following network data types.

- — A gene Co-Ex network represents correlations between gene expression levels across different samples, or experimental conditions. Usually, Pearson correlation coefficient (PCC) between all pairs of genes is computed based on their vectors of expression levels across different samples, or experimental conditions. Then, using a statistical method, a significant value of PCC (threshold) is determined. Two genes are linked if their PCC is higher than the threshold value. The choice of the threshold greatly influences the resulting topology of the Co-Ex network. Therefore, it is essential to properly determine the appropriate threshold. For that purpose, a couple of methods have been proposed. Some methods use prior biological knowledge (e.g. known functional associations) to constrain the network construction [109]; others use statistical comparison with randomized expression data [110], or random matrix theory [111]. However, these studies do not account for the number of experimental conditions (the length of the vector of expression profiles), which has been shown to greatly influence the choice of the correlation threshold [112]. Hence, methods to overcome such limitations have been proposed. They rely on a combination of partial correlation [113] and information theory [114] approaches to determine a local correlation threshold for each pair of genes. Unlike the single correlation threshold applied across the entire network, this approach allows for identification of more meaningful gene-to-gene associations [112].

- — A drug chemical similarity (DCS) network represents similarities and differences between drugs' chemical structures. Each drug is composed of chemical substructures, which define its chemical fingerprint. These chemical fingerprints are usually represented by binary vectors whose coordinates encode the presence (‘1’) or absence (‘0’) of a particular substructure from a set of all known substructures. The chemical similarity between two drugs is computed based on the similarity between these vectors. Various measures for computing the similarity have been proposed [115,116]. A commonly used one is the Tanimoto coefficient [115]. The procedure for network construction is similar to that of Co-Ex networks. First, the statistically significant similarity threshold is determined, and then, based on the threshold, links between drugs are constructed. Other types of drug–drug similarity networks have also been used in the network data integration literature. A frequently considered one is the drug side-effect similarity (DSES) network. Namely, clinical side-effects of each drug have been collected and stored in numerous databases. The side-effects provide a drug with a profile and allow for construction of pairwise side-effect similarities between two drugs using the Jaccard index [117].

- — Protein similarity networks represent networks of proteins with similar sequences (PSeqS) [118] or structures (PStrS) [119]. Similarities between protein sequences are usually computed by using the BLAST algorithm [120], whereas the similarities between three-dimensional protein structures are usually computed by using the DaliLite algorithm [121].

Table 1.

Different types of biological data (the first two columns), types of biological entities and relations (interactions) between them (the second two columns) and databases containing the data (the last column).

| data type | network | entities/nodes | interactions/edges | data resource |

|---|---|---|---|---|

| molecular interactions | PPI | proteins | physical bonds | BioGRID [96] |

| MI | enzymes (proteins) | reaction catalysis | KEGG [97] | |

| DTI | drugs/targets | physical bonds | DrugBank [98], PubChem [46] | |

| functional associations | GI | genes (proteins) | genetic interactions | BioGRID [96] |

| GDA | genes/diseases | associations | OMIM [45], GWAS [99] PheWAS [100] |

|

| ON | GO (DO) term | hierarchical relations | GO [41], DO [42] | |

| functional/structural similarities | Co-Ex | genes | expression profile similarities | GEO [101], ArrayExpress [102], SMD [103] |

| DCS | drugs | structural similarities | DrugBank [98], PubChem [46] | |

| DSES | drugs | side-effect profile similarities | SIDER [104] | |

| PSeqS | proteins | protein sequence similarities | RefSeq [36] | |

| PStrS | proteins | structural similarities | PDB [43] |

Each of the above-described biological networks represents an important component of the system's cellular functioning and they often complement each other. For example, by comparing the number of common links between biological networks, many studies have reported a large overlap of the links between PPI and gene Co-Ex networks [122], whereas a small overlap of links has been observed between PPI and GI networks [123]. Hence, these studies have indicated that a GI network is a valuable complement to the other two biological networks and this has been confirmed in several network integration studies [55,61,66].

3. Computational methods for data integration

3.1. Types and strategies of data integration

Based on the type of data they integrate, integration methods can be divided into two types: homogeneous and heterogeneous integration methods (see table 2 for a detailed summary of the classification of methods into these types). Homogeneous integration deals with integration of networks with the same type of nodes (e.g. proteins), but different types of links between the nodes (e.g. GIs, PPIs, etc.). However, many biological data are heterogeneous, consisting of various types of biological entities and various types of relations. These data can be represented as collections of inter-related networks with various types of nodes and edges. For example, the GDA network along with the DCS network and the PPI network forms a heterogeneous network with multiple node and edge types. Heterogeneous data integration deals with a collective mining of these networks and the construction of a unified model.

The strategies for data integration can be divided into the following three categories (see table 2 for a detailed summary) [51,128,129,135–137]:

— Early (or full) data integration combines the datasets into a single dataset on which the data model is built. This often requires a transformation of the datasets into a common representation, which in some cases may result in information loss [51,135].

— Late (or decision) data integration builds models for each dataset separately, then it combines these models into a unified model. Building models from each dataset in isolation from others disregards their mutual relations, often resulting in reduced performance of the final model [128,135].

— Intermediate (or partial) data integration combines data through inference of a joint model. This strategy has often been preferred owing to its superior predictive accuracy reported in many studies (regardless of the chosen methods) [51,128,129,135,136], but there are some studies that report superiority of early and late integration strategy over the intermediate strategy [137]. This strategy does not require any data transformation and thus, it does not result in information loss.

3.2. Network-based methods

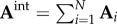

The majority of NB methods use very simple ways to integrate different types of network data and to create an integrated representation (or a model) of a set of networks. For example, in homogeneous network integration (see figure 2b for an illustration), where N different networks,  , with the same set of nodes, V, but different sets of links, Ei, are considered, a common way to construct an integrated network is by merging links of all networks over the same set of nodes (i.e.

, with the same set of nodes, V, but different sets of links, Ei, are considered, a common way to construct an integrated network is by merging links of all networks over the same set of nodes (i.e.  [66]. The adjacency matrix of the resulting integrated network is just a simple sum over the adjacency matrices representing individual networks:

[66]. The adjacency matrix of the resulting integrated network is just a simple sum over the adjacency matrices representing individual networks:  . In the case of weighted networks, the entries in the individual adjacency matrices, Ai, are scaled in the same range. However, this approach neglects the compatibility issues among individual networks in the construction of the integrated network. For example, by merging the links of modular3 and non-modular networks, the resulting network may not retain the modular structure. Other approaches that try to overcome this disadvantage create a weighted sum of adjacency matrices to construct the adjacency matrix of the merged network. Namely,

. In the case of weighted networks, the entries in the individual adjacency matrices, Ai, are scaled in the same range. However, this approach neglects the compatibility issues among individual networks in the construction of the integrated network. For example, by merging the links of modular3 and non-modular networks, the resulting network may not retain the modular structure. Other approaches that try to overcome this disadvantage create a weighted sum of adjacency matrices to construct the adjacency matrix of the merged network. Namely,  , where the weight wi ≥ 0 assigned to network i represents the contribution of network i to the quality of the inference4 (e.g. protein function prediction) on the merged network [34,124,138]. The weighting coefficients are obtained by solving a linear regression problem, which assigns lower weights to ‘less important’ networks. However, such weighting is problem-dependent, i.e. the structure of the resulting integrated network depends on the biological problem at study.

, where the weight wi ≥ 0 assigned to network i represents the contribution of network i to the quality of the inference4 (e.g. protein function prediction) on the merged network [34,124,138]. The weighting coefficients are obtained by solving a linear regression problem, which assigns lower weights to ‘less important’ networks. However, such weighting is problem-dependent, i.e. the structure of the resulting integrated network depends on the biological problem at study.

In heterogeneous network integration (see figure 2a for an illustration), the majority of studies have integrated networks containing different types of nodes and links by applying simple ‘projection’ methods [67,68,139]. Namely, they project network layers onto the one they are interested in. For example, in figure 2a, the GI network is projected onto the disease similarity network (DSN) by relating two diseases that have a gene in common. A weight of a link in the resulting disease–disease network represents the link multiplicity resulting from the projection. The disease–disease network is then further analysed by using standard NB methods. However, this projection method often results in information loss. Namely, by projecting networks onto a single node type network, the connectivity information of other node type networks is lost. That is, by projecting the gene–gene interaction network onto the disease–disease association network, the information about gene connections is lost along with the whole structure of the gene–gene interaction network. Therefore, by using these methods, we cannot analyse disease and gene connectivity patterns simultaneously.

More sophisticated methods capable of simultaneously analysing connectivity patterns of various networks are based on diffusion (information spreading across network links) over heterogeneous networks. They simultaneously explore the structure of each network and of their mutual relations; based on all this information, they create an integrated inference. Such approaches, also called network propagation methods, have been applied to biological problems, including gene–disease prioritization [69,72], drug–target prediction, drug repurposing [70,71] and drug–disease association prediction [73]. Although these approaches are mainly designed for a pair of inter-related networks, their further extensions to handle more networks are possible. For example, Huang et al. [73] extended the network propagation method to three inter-related networks. However, with the inclusion of multiple networks, the number of coupled iterative equations for information propagation (diffusion) grows and hence, the running time of the algorithm increases. Therefore, the scalability of these methods is limited.

3.3. Bayesian networks

BNs belong to the class of probabilistic graphical models that combine concepts from probability and graph theory to represent and model causal relations between random variables describing data [140]. A BN is a DAG, where nodes represent random variables (e.g. gene expression levels) and directed edges represent conditional probabilities between pairs of variables. For instance, a conditional probability distribution (CPD), between variables X and Y, denoted as p(X|Y), represents the probability of X given the value of Y. CPDs can model conditional dependencies between discrete or continuous variables, or a combination of both. For discrete variables, CPDs are given in the form of conditional probability tables containing values of probabilities that represent parameters of the model. For continuous variables, CPDs are usually modelled by using Gaussian distributions with the mean and standard deviation as model parameters (μ, σ). For example, CPD p(X|Y), with X being a continuous variable and Y being a discrete variable, can be represented as a set of parameters  , each for a different value of

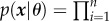

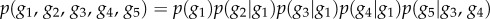

, each for a different value of  (i.e. (μi, σi) are the parameters of the Gaussian distribution p(x|yi)). BNs provide an elegant way to represent the structure of the data and their sparsity enables a compact representation and computation of the joint probability distribution (JPD) over the whole set of random variables. That is, the number of parameters to characterize the JPD is drastically reduced in the BN representation [80,140]; namely, a unique JPD of a BN containing n nodes (variables), x = (x1 , … , xn), can be formulated as:

(i.e. (μi, σi) are the parameters of the Gaussian distribution p(x|yi)). BNs provide an elegant way to represent the structure of the data and their sparsity enables a compact representation and computation of the joint probability distribution (JPD) over the whole set of random variables. That is, the number of parameters to characterize the JPD is drastically reduced in the BN representation [80,140]; namely, a unique JPD of a BN containing n nodes (variables), x = (x1 , … , xn), can be formulated as:

, where Pa(xi) denotes parents of variable xi and θ = (θ1 , … , θn) denotes the model parameters (i.e. θi = (μi,σi) denotes the set of parameters defining the CPD, p(xi|Pa(xi))).

, where Pa(xi) denotes parents of variable xi and θ = (θ1 , … , θn) denotes the model parameters (i.e. θi = (μi,σi) denotes the set of parameters defining the CPD, p(xi|Pa(xi))).

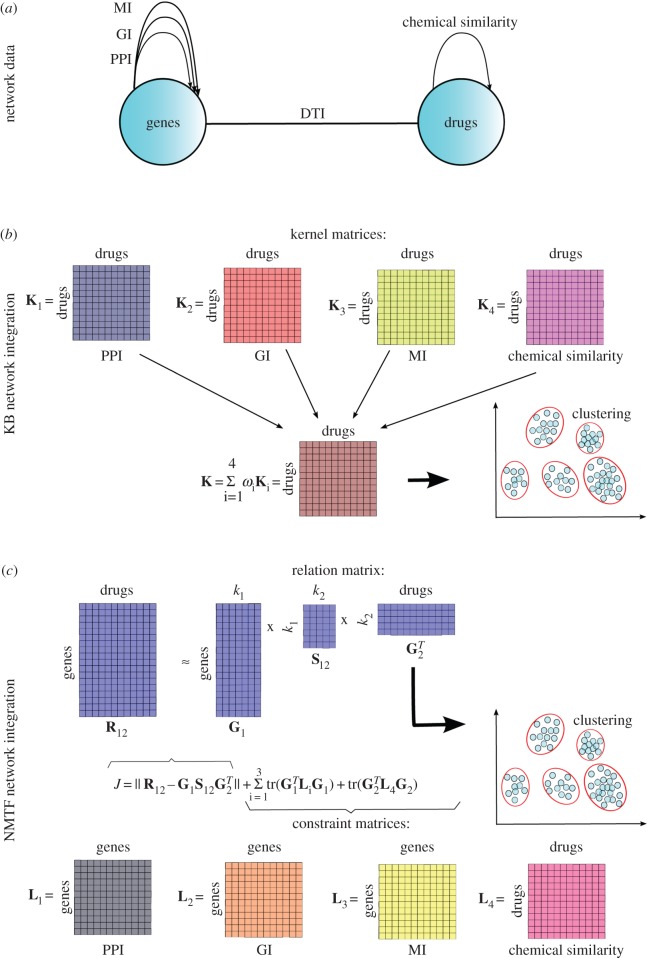

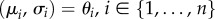

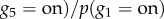

BNs have been applied to many tasks in systems biology, including modelling of protein signalling pathways [141], gene function prediction [54] and inference of cellular networks [142]. An illustration of a BN representing a GRN is shown in figure 3a. Each gene is represented by a variable denoting its expression. A state of each variable (gene expression) depends only on the states of its parents. This enables a factorization of a JPD into a product of CPDs describing each gene in terms of its parents.

Figure 3.

(a) A schematic illustration of a gene regulatory network modelled by BN. Genes are represented by nodes, whereas regulatory relations between genes are represented by directed edges. Gene g1 regulates the expression of genes g2, g3 and g4, and genes g3 and g4 regulate the expression of gene g5. Gene g1 is called a parent of g2, g3 and g4, whereas genes g2, g3 and g4 are called children of gene g1 (similar holds for other relations). A sparse representation implies that the expression level of a gene depends only on the expression levels of its regulators (parents in the network). The JPD of the system is  . (b) An example of a naive BN with a class node y being the parent to independent nodes x1, x2 , … , xN.

. (b) An example of a naive BN with a class node y being the parent to independent nodes x1, x2 , … , xN.

Constructing a BN describing the data consists of two steps: parameter learning and structure learning [80,140]. Because the number of possible structures of a BN grows super-exponentially with the number of nodes, the search for the BN that best describes the data is an NP-hard problem, and therefore heuristic (approximate) methods are used for solving it [143]. They usually start with some initial network structure and then gradually change it by adding, deleting or re-wiring some edges until the best scoring structure is obtained. For details about these and parameter estimation methods, we refer the reader to reference [80].

When the structure and parameters of a BN are learned (i.e. JPD is determined), an inference about dependencies between variables can be made. For example, assuming discrete values of variables describing genes as either expressed (on) or not (off) in figure 3a, we can ask what the likelihood of gene g5 being expressed is, given that gene g1 is expressed. This can be formulated as

, where the numerator can be calculated by using the marginalization rule (i.e. by summing over all unknown (marginal) variables considering their possible values) [140]:

, where the numerator can be calculated by using the marginalization rule (i.e. by summing over all unknown (marginal) variables considering their possible values) [140]:

For large systems, with large numbers of variables, this summation becomes computationally intractable: the exact inference, or the summation of JPD over all possible values of unknown variables, is known to be an NP-hard problem [144]. Consequently, many approximation methods, such as variational methods and sampling methods, have been proposed [140].

For large systems, with large numbers of variables, this summation becomes computationally intractable: the exact inference, or the summation of JPD over all possible values of unknown variables, is known to be an NP-hard problem [144]. Consequently, many approximation methods, such as variational methods and sampling methods, have been proposed [140].

Recently, BNs have been used as a suitable framework for integration and modelling of various types of biological data. One of the biggest challenges in systems biology is a problem of network inference from disparate data sources—the construction of sparse networks where only important gene associations are present (strength of associations are represented by conditional probabilities) [74]. Disparate data sources can be incorporated in either one of two steps of BN construction—parameter learning or structure learning. Such networks play an important role in describing and predicting complex behaviour of a system supported by evidence from a variety of different biological data [145].

For example, Zhu et al. [125] combined gene expression data (GExD), expression of quantitative trait loci (eQTL),5 transcription factor binding site (TFBS) and PPI data to construct a causal, probabilistic network of yeast. In particular, they used the eQTL data to constrain the addition of edges in the probabilistic network, so that cis-eQTL acting genes are considered to be parents of trans-eQTL acting genes. They tested the performance of the constructed BN in predicting GO categories and they demonstrated that the predictive power of the integrated BN is significantly higher than that of the BN constructed solely from the gene expression data [125]. A similar procedure has also been applied by Zhang et al. [126], who constructed a gene-regulatory network by integrating data from brain tissues of late-onset Alzheimer's disease patients.

One of the first studies that integrated clinical and patient-specific omics data was presented by Gevaert et al. [128]. They integrated the gene expression data of tissues from breast cancer patients whose clinical outcome was known. They constructed the BN with genes and outcome variable (representing clinical data) as nodes and used it for a classification task: they classified patients into good and poor prognosis groups. They compared the performance of BN in reproducing the known outcomes in three different strategies, early, late and intermediate (see §3.1). They showed that the intermediate strategy was the most accurate one. A similar conclusion was drawn by van Vilet et al. [129].

Most studies have used the simplest BN, the so-called naive BN, for combining multiple heterogeneous biological data and constructing an integrated gene–gene association network (also called an FLN) [35,50,127,147]. The structure of a naive BN consists of a class node as a parent to all other independent nodes representing different data sources. Such a simple BN structure enables a much faster learning and inference. For example, in gene–gene association prediction, the class node may represent a set of interacting or non-interacting proteins, whereas the other variables in the naive BN represent input biological data, often in pairwise format (see §2). In addition to the input data, a gold standard data (e.g. gene pairs with known functional relations, usually from GO) is used for learning, i.e. for constructing the probability distributions. The basic assumption of a naive BN is that different data sources are conditionally independent, i.e. that the information in the datasets are independent given that a gene pair is either functionally associated or not.

Although simple, naive BNs have yielded good results in many data integration studies. They were initially proposed for data integration by Troyanskaya et al. [54], who developed a framework called multi-source association of genes by integration of clusters (MAGIC) for gene function prediction. They integrated systems-level PPI and GI data along with GExD and TFBS data of Saccharomyces cerevisiae. By using GO as the gold standard, they demonstrated an increased accuracy of their method applied on all datasets, compared with its performance on each input dataset separately. Furthermore, naive BNs have demonstrated usability in patient-specific data integration. Namely, a recent study used a naive BN to integrate gene expression and CNV6 data of prostate and breast cancer patients [130]. Unlike other methods, this method successfully detected a new subtype of prostate cancer patients with extremely poor survival outcome. Moreover, unlike many data integration studies that force incompatible data types to be fused, this is the first study that systematically considered compatibility of input data sources (see challenge 4 in §1.2). That is, the method was able to distinguish between concordant and discordant signals within each patient sample.

BNs are a good framework for biological network integration because of their ability to capture noisy conditional dependence between data variables through the construction of CPDs. However, they also have several disadvantages: (i) in the network inference problems, their sparse representation captures only important associations, whereas other associations are discarded; (ii) their acyclic representation cannot be used for modelling networks with loops, which are important in many biological networks, as they represent control mechanisms; (iii) the most important limitation of BNs is computational: as mentioned earlier, learning and inference processes of BNs are computationally intractable on large data, which is a major reason why many studies focus only on a small subset of nodes (genes) when constructing a BN.

3.4. Kernel-based methods

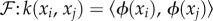

KB methods belong to the class of statistical ML methods used for data pattern analysis, e.g. for learning tasks, such as clustering, classification, regression, correlation, feature selection, etc. They work by mapping the original data to a higher dimensional space, called the feature space, in which the pattern analysis is performed. Such a mapping is represented by a kernel matrix [148]. A kernel matrix, K, is a symmetric, positive semi-definite matrix7 with entries Ki,j = k(xi, xj) representing similarities between all pairs of data points, xi, xj. The similarity between two data points k(xi,

xj) is computed as an inner product between their representations, ϕ(xi), ϕ(xj), in the feature space,  , where, ϕ maps data points from the input space,

, where, ϕ maps data points from the input space,  , to the feature space

, to the feature space  (a vector space where data points are represented as vectors), i.e.

(a vector space where data points are represented as vectors), i.e.  [79,148]. Function k(xi, xj) is called a kernel function and its explicit definition is the only requirement of the kernel method, whereas the mapping function ϕ and the properties of the feature space

[79,148]. Function k(xi, xj) is called a kernel function and its explicit definition is the only requirement of the kernel method, whereas the mapping function ϕ and the properties of the feature space  do not need to be explicitly specified. For example, given a set of proteins and their amino acid sequences, entries in the kernel matrix are usually generated by using the BLAST pairwise sequence alignment algorithm [120]. Hence, computing the protein embedding function, ϕ, and constructing the feature space,

do not need to be explicitly specified. For example, given a set of proteins and their amino acid sequences, entries in the kernel matrix are usually generated by using the BLAST pairwise sequence alignment algorithm [120]. Hence, computing the protein embedding function, ϕ, and constructing the feature space,  , is not necessary. Other kernel functions for measuring similarities between two proteins include the spectrum kernel [150,151], the motif kernel [152] and the Pfam kernel [153]. In addition to string data, molecular network data are frequently used in KB integration studies. Molecular networks are usually represented by diffusion kernels, which encode similarities between the nodes of the network [154], defined as:

, is not necessary. Other kernel functions for measuring similarities between two proteins include the spectrum kernel [150,151], the motif kernel [152] and the Pfam kernel [153]. In addition to string data, molecular network data are frequently used in KB integration studies. Molecular networks are usually represented by diffusion kernels, which encode similarities between the nodes of the network [154], defined as:  , where β > 0 is the parameter that quantifies the degree of the diffusion and L is the network Laplacian (see §2 for further details). The elements of the diffusion kernel matrix, Kij, quantify the closeness between nodes i and j in the network.

, where β > 0 is the parameter that quantifies the degree of the diffusion and L is the network Laplacian (see §2 for further details). The elements of the diffusion kernel matrix, Kij, quantify the closeness between nodes i and j in the network.

The methods using kernel matrices of data include: support vector machines (SVMs) [155], principal component analysis (PCA) [156], canonical correlation analysis (CCA) [157]. SVM classifiers have frequently been used for prediction tasks in computational biology owing to their high accuracy [158]. They were originally used for binary classification problems and can be defined as follows: given two classes of data items, a positive and a negative class labelled as  , and a dataset consisting of n classified data points (xi, yi), where data point xi belongs to a class yi, a classification task consists of correctly predicting a class membership of a new, unlabelled data point, xnew [159]. This can be formulated as an optimization problem: given the kernel matrix constructed between all pairs of data points, K(xi, xj), and labels yi, construct a hyperplane in the high-dimensional feature space that separates –1 class from +1 class by maximizing the margin, that is, the distance from the hyperplane to any data point from any class. Having obtained the hyperplane, the membership of a new data point is then easily predicted by localizing the data point in the feature space with respect to the separating hyperplane. For a more detailed description and application of this method, we refer the reader to review papers [160,161].

, and a dataset consisting of n classified data points (xi, yi), where data point xi belongs to a class yi, a classification task consists of correctly predicting a class membership of a new, unlabelled data point, xnew [159]. This can be formulated as an optimization problem: given the kernel matrix constructed between all pairs of data points, K(xi, xj), and labels yi, construct a hyperplane in the high-dimensional feature space that separates –1 class from +1 class by maximizing the margin, that is, the distance from the hyperplane to any data point from any class. Having obtained the hyperplane, the membership of a new data point is then easily predicted by localizing the data point in the feature space with respect to the separating hyperplane. For a more detailed description and application of this method, we refer the reader to review papers [160,161].

Kernel matrices represent a principled framework for representing different types of data including strings, sets, vectors, time series and graphs [79]. This property provides the KB methods with an advantage over the BN-based methods, as the BN-based methods are mostly suited to pairwise datasets owing to easier construction of conditional probabilities in that case. However, choosing the ‘right’ kernel function is not straightforward. Therefore, instead of constructing a single kernel, usually multiple candidate kernels for a single dataset are constructed using different measures of similarity [162]. These kernels are then linearly combined into one kernel that is then used for further analysis. Such an approach is called a multiple kernel learning (MKL) and has been shown to lead to a better performance than single KB methods, especially in genomic data analysis [163]. The mathematical basis for this approach is in the closure property of kernel matrices [79]. Namely, all elementary mathematical operations (e.g. addition, subtraction, multiplication) between two kernel matrices do not change the property of the final matrix (i.e. the positive semi-definite property stays preserved). This property provides the foundation for using KB methods for data integration by MKL. Namely, given a set of kernel matrices, {K1 , … , Kn}, representing n different datasets, the single kernel matrix representing integrated data is obtained by a linear combination,  , where non-negative coefficients, ωi, determine the weights of each dataset and they are obtained through an optimization procedure of the KB method [52,164]. Namely, the non-negativity constraint imposed on weights reduces the optimization problem to a quadratically constrained quadratic problem [51]. The solution of this optimization problem gives the weighting coefficients. Thus, the obtained weights, ωi, allow us to distinguish between more and less informative datasets. Note that all kernel matrices, Ki, representing different datasets, need to be constructed over the same feature space to be correctly combined. In the case of heterogeneous data integration, this often requires data to be transformed, or projected onto the same feature space, which often results in information loss. This is the biggest drawback of KB data integration methods.

, where non-negative coefficients, ωi, determine the weights of each dataset and they are obtained through an optimization procedure of the KB method [52,164]. Namely, the non-negativity constraint imposed on weights reduces the optimization problem to a quadratically constrained quadratic problem [51]. The solution of this optimization problem gives the weighting coefficients. Thus, the obtained weights, ωi, allow us to distinguish between more and less informative datasets. Note that all kernel matrices, Ki, representing different datasets, need to be constructed over the same feature space to be correctly combined. In the case of heterogeneous data integration, this often requires data to be transformed, or projected onto the same feature space, which often results in information loss. This is the biggest drawback of KB data integration methods.

KB methods have first been proposed as a technique for data integration by Lanckriet et al. [51]. In that paper, they trained a 1-norm soft margin8 SVM to classify proteins into membrane or ribosomal groups. They constructed seven different kernels representing three different types of data: amino acid sequences, gene expression and PPI data. They showed that the performance of their classifier is the highest when all datasets are taken into account. A similar approach was applied for function prediction of baker's yeast proteins [52] and demonstrated that an SVM classifier trained on all data performs better than a classifier trained on any single type of data.

KB methods have also demonstrated their power in integrating molecular, structural and phenotypic data for drug repurposing. A recent study integrates three different layers of information represented in a drug-centred feature space [63]. Their kernel matrices represent (i) drug chemical similarities based on their structures (see §2 for details about measures of chemical similarity); (ii) drug similarities based on the positions of their targets in the PPI network; and (iii) drug similarities based on the correlations between gene profiles under the drug influence. Based on the combination of these three kernel matrices and the existing drug classification, the authors trained the classifier and proposed the top misclassified drugs as new candidates for repurposing [63]. In a similar study, a method called PreDR (predict drug repurposing) for predicting novel drug–disease associations was proposed. An SVM classier was trained on integrated kernel matrices of drugs based on their chemical structure, target proteins and side-effect profiles [131].

Although SVM methods are the most popular KB methods for regression and classification tasks in computational biology, other learning methods can also be applied on kernel matrices for performing different tasks. For example, a special application of KB methods (as well as of BN methods) is in the network inference problem. Unlike BN methods, which reconstruct the whole network without any prior knowledge about its structure, KB methods assume that a part of the network is known. For instance, Kato et al. [132] use a small part of the PPI network and the three different types of protein data to complete the whole PPI network. Namely, they construct a small, incomplete kernel matrix representing a known part of the PPI network and define kernel matrix completion problem that uses a weighted combination of three kernel matrices representing similarities between gene expression profiles, phylogenetic profiles (PhylProf) and amino acid sequences to complete the small kernel matrix. The authors report the high accuracy of their method in the reconstruction of the PPI network, and metabolic networks, and outline the ability of the method to selectively integrate different biological data. Another study uses the kernel CCA method to infer the PPI network and to identify features indicative of PPIs by detecting correlations between heterogeneous datasets (gene expression, protein localization and phylogenetic profiles) and the PPI network [133]. The inference process is done in a supervised manner, as it uses part of the true protein network as the gold standard.

Along with BN methods, KB methods can also be used for integration of clinical data with genomic data. An example study that integrates gene expression and clinical data of breast cancer patients is demonstrated by Daement et al. [134]. They applied a least-square SVM [165] on the combination of two patient-centric kernel matrices: the first was constructed based on patients' gene expression similarities, whereas the second was constructed based on similarities between patients' clinical variables. The authors reported 70% accuracy of their approach to correctly classify patients according to the appearance of distant subclinical metastases based on the primary tumour. They also demonstrated that both datasets, the expression and the clinical data, contribute to the performance of the classification.

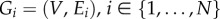

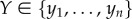

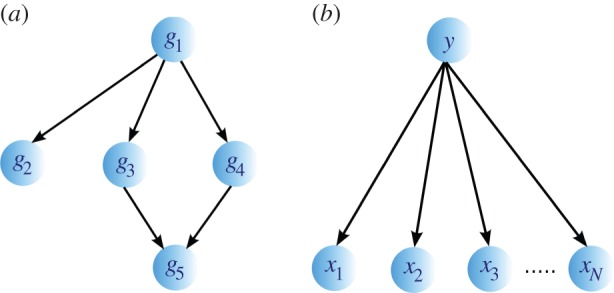

From these examples, we can see that data integration by using KB methods has several advantages. The main advantage is that they can integrate a wide range of data types. Moreover, a liner combination of kernels provides a selective way of accounting for datasets, by assigning lower weights to less informative and noisier datasets. Hence, this integration method meets challenges 2 and 3 listed in §1.2. However, a big disadvantage is that heterogeneous datasets need to be transformed into a common feature space to be properly integrated, which can lead to information loss (see example shown in figure 4a,b). In the case of heterogeneous networks, data transformation prevents modelling of multi-relational data, i.e. a simultaneous modelling of different types of relations in the data.

Figure 4.

(a) Heterogeneous networks of genes (PPI, GI and MI) and drugs (chemical similarities) and links between drugs and genes (DTI). Intertype relations are represented by drug–target interaction (DTI) network, whereas intratype connections are represented by four networks: protein–protein interaction (PPI), genetic interaction (GI) and metabolic interaction (MI) molecular networks of genes, and the chemical similarity network of drugs (see §2 for further details about these networks and their construction). (b) An illustration of a KB data integration method for drug clustering. All kernel matrices are expressed in the drug similarity feature space based on the closeness between their targets (proteins) in each molecular network (K1, K2 and K3) and based on the similarity between their chemical structures (K4). All kernel matrices are linearly combined into a resulting kernel matrix K, on which the drug clustering is performed by using KB clustering methods. (c) An illustration of an NMTF-based data integration method for drug clustering: factorization of the DTI relation matrix under the guidance of molecular and chemical connectivity constraints represented by the constraint matrices. Drugs are assigned to clusters based on the entries in obtained G2 cluster indicator matrix.

3.5. Non-negative matrix factorization

NMF is an ML method commonly used for dimensionality reduction and clustering problems. It aims to find two low-dimensional, non-negative matrices,  and

and  , whose product provides a good approximation of the input non-negative data matrix,

, whose product provides a good approximation of the input non-negative data matrix,  , i.e. X ≈ UV. The method was originally introduced by Lee & Seung [166] for parts-based decomposition of images. The non-negativity constraints provide matrix factors, U and V, with a more meaningful interpretation than previous approaches, such as PCA [156] and vector quantization [167], whereas the choice of parameter k ≪ min{n1, n2} (also called the rank parameter) provides a dimensionality reduction [168].

, i.e. X ≈ UV. The method was originally introduced by Lee & Seung [166] for parts-based decomposition of images. The non-negativity constraints provide matrix factors, U and V, with a more meaningful interpretation than previous approaches, such as PCA [156] and vector quantization [167], whereas the choice of parameter k ≪ min{n1, n2} (also called the rank parameter) provides a dimensionality reduction [168].

In recent years, there has been a significant increase in the number of studies using NMF owing to it being a relaxed form of K-means clustering, one the most widely used unsupervised learning algorithms [169]. Namely, NMF can be applied to clustering as follows: a set of n data points represented by d-dimensional vectors can be placed into columns of a d × n data matrix X. This matrix is then approximately factorized into two non-negative matrices, U and V, where matrix  is the cluster indicator matrix, that is, based on its entries, n data points are assigned to k clusters, whereas U is the basis matrix. In particular, each data point, j, is assigned to cluster, i, if Vij is the maximum value in column j of matrix V. This procedure is called a hard clustering, as each data point belongs to exactly one cluster [170]. For recent advances on using NMF methods for other clustering problems, we refer the reader to a recent book chapter [171].

is the cluster indicator matrix, that is, based on its entries, n data points are assigned to k clusters, whereas U is the basis matrix. In particular, each data point, j, is assigned to cluster, i, if Vij is the maximum value in column j of matrix V. This procedure is called a hard clustering, as each data point belongs to exactly one cluster [170]. For recent advances on using NMF methods for other clustering problems, we refer the reader to a recent book chapter [171].

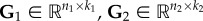

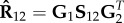

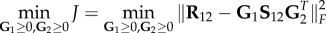

The NMF method has found applications in many areas, including computer vision [166,172], document clustering [173,174], signal processing [175,176], bioinformatics [57,177,178], recommendation systems [179,180] and social sciences [181,182]. This is due to the fact that NMF can cover nearly all categories of ML problems. Nevertheless, the biggest application comes with the extension of NMF to heterogeneous data. Namely, the above-described NMF can only be used for homogeneous data clustering. Therefore, the formalism was further extended by Ding et al. [183] to co-cluster heterogeneous data by defining non-negative matrix tri-factorization (NMTF). Given a data matrix, R12, encoding relations between two sets of objects of different types (e.g. adjacency matrix of DTI bipartite network representing interactions between n1 genes and n2 drugs, see example in figure 4a,c), NMTF, decompose matrix  into three non-negative matrix factors as follows:

into three non-negative matrix factors as follows:  , where

, where  are the cluster indicator matrices of the first and the second dataset, respectively, and

are the cluster indicator matrices of the first and the second dataset, respectively, and  is a low-dimensional representation of the initial matrix. In analogy with NMF method, rank parameters k1 and k2 correspond to numbers of clusters in the first and the second dataset. In addition to co-clustering, NMTF can also be used for matrix completion [184]. Namely, after obtaining low-dimensional matrix factors, the reconstructed data matrix

is a low-dimensional representation of the initial matrix. In analogy with NMF method, rank parameters k1 and k2 correspond to numbers of clusters in the first and the second dataset. In addition to co-clustering, NMTF can also be used for matrix completion [184]. Namely, after obtaining low-dimensional matrix factors, the reconstructed data matrix

is more complete than the initial data matrix, R12, featuring new entries, unobserved in the data, that emerged from the latent structure captured by the low-dimensional matrix factors. Therefore, NMTF provides a unique approach for modelling multi-relational heterogeneous network data and predicting new, previously unobserved links.

is more complete than the initial data matrix, R12, featuring new entries, unobserved in the data, that emerged from the latent structure captured by the low-dimensional matrix factors. Therefore, NMTF provides a unique approach for modelling multi-relational heterogeneous network data and predicting new, previously unobserved links.

The problem of finding optimal low-rank non-negative matrices whose product is equal to the initial data matrix is known to be NP-hard [185]. Thus, heuristic algorithms for finding approximate solutions have been proposed [186]. They involve solving an optimization problem that minimizes the distance between the input data matrix and the product of low-dimensional matrix factors. The most common measure of the distance used in construction of the objective (cost) function is the Frobenius norm (also called the Euclidean norm) [149]. Hence, the objective function to be minimized can be defined as follows  . Note that it is not necessary to impose the non-negativity constraint to the S12 matrix, as only the non-negativity of G1 and G2 is required for co-clustering problems. This is also known as a semi-NMTF problem [187]. Low-dimensional matrix factors, G1, G2 and S12, are computed by using iterative update rules derived by applying standard procedures from constrained optimization theory [188]. These update rules ensure decreasing behaviour of the objective function, J, over iterations. The most popular rules are multiplicative update rules, which preserve the non-negative property of the matrix factors through update iterations. They start with randomly initialized matrix factors and iteratively update them until the convergence criterion is met [183,189]. For more details about the convergence criterion, other update rules and initialization strategies, we refer the reader to references [186,190].

. Note that it is not necessary to impose the non-negativity constraint to the S12 matrix, as only the non-negativity of G1 and G2 is required for co-clustering problems. This is also known as a semi-NMTF problem [187]. Low-dimensional matrix factors, G1, G2 and S12, are computed by using iterative update rules derived by applying standard procedures from constrained optimization theory [188]. These update rules ensure decreasing behaviour of the objective function, J, over iterations. The most popular rules are multiplicative update rules, which preserve the non-negative property of the matrix factors through update iterations. They start with randomly initialized matrix factors and iteratively update them until the convergence criterion is met [183,189]. For more details about the convergence criterion, other update rules and initialization strategies, we refer the reader to references [186,190].

Note that the NMF optimization problems belong to the group of non-convex optimization problems (i.e. the objective function, J, is a non-convex function of its variables) [186]. Unlike convex optimization problems, which are characterized by the global minimum solution and whose algorithms scale well with the problem size [191], non-convex optimization problems face a range of difficulties, including finding the global minimum (and thus the unique solution) and a very slow convergence to a local minimum. Nevertheless, even a local minimum solution of NMF has been shown to have meaningful properties in many data mining applications [186]. Using this method for data integration is based on penalized non-negative matrix tri-factorization (PNMTF), which was originally designed for co-clustering heterogeneous relational data [192,193]. Applicability of PNMTF to data integration problems comes from the fact that it can easily be extended to any number, N, of datasets mutually related by relation matrices

Rij (e.g. sets of genes, drugs, diseases, etc.) [135], where indices, i ≠ j, 1 ≤ i, j ≤ N, denote different datasets. The relation matrices are simultaneously decomposed into low-dimensional factors, Gi, Gj and Sij, within the same optimization function. The key ingredients of this approach are low-dimensional factors, Gi, 1 ≤ i ≤ N, that are shared across the decomposition of all relation matrices, ensuring the influence of all datasets on the resulting model. For example, matrix G3 is shared in the decomposition of all relation matrices Ri3 and R3j,  , and therefore, the clustering assignment obtained from matrix G3 is influenced by all datasets represented by these relation matrices. Similarly, for instance, the reconstruction of matrix

, and therefore, the clustering assignment obtained from matrix G3 is influenced by all datasets represented by these relation matrices. Similarly, for instance, the reconstruction of matrix  is influenced by all datasets represented by matrices Rij, i ≠ 2 and j ≠ 3, whose factorizations include either matrix G2 or G3.

is influenced by all datasets represented by matrices Rij, i ≠ 2 and j ≠ 3, whose factorizations include either matrix G2 or G3.

Moreover, the method can further be extended as a semi-supervised method that incorporates additional, prior information into the objective function to guide the co-clustering. Namely, in many studies, the datasets itself can have their internal structures represented by networks. For example, in figure 4a,c, in addition to intertype drug–gene relations represented by relation matrix R12, both datasets, drugs and genes are characterized by intratype connections represented by different networks, molecular networks connecting genes and a chemical similarity network connecting drugs. These connections are encoded in the form of Laplacian matrices, Li, and they are incorporated into the objective function as constraints (hence the name constraint matrices) to guide the co-clustering, by enforcing two connected drugs or genes to belong to the same cluster. For instance, the last two terms in the formula displayed in figure 4c represent penalty terms through which these constraint matrices are incorporated into the objective function. These terms are also known as graph regularization terms [194,195]. For more details about the construction of the objective function and derivation of the multiplicative update rules, we refer the reader to references [135,192,193].