
Commit

This commit does not belong to any branch on this repository, and may belong to a fork outside of the repository.


Showing 9 changed files with 2,442 additions and 0 deletions.



| Original file line number | Diff line number | Diff line change |

|---|---|---|

| @@ -0,0 +1,56 @@ | ||

| <p align="center"> | ||

| <img src="https://raw.githubusercontent.com/yuweihao/misc/master/MM-Vet/mm-vet-v2_logo.jpg" width="400"> <br> | ||

| </p> | ||

|

|

||

|

|

||

| # [MM-Vet v2: A Challenging Benchmark to Evaluate Large Multimodal Models for Integrated Capabilities](https://arxiv.org/abs/xxxx.xxxxx) | ||

|

|

||

| <p align="center"> | ||

| [<a href="https://arxiv.org/abs/xxxx.xxxxx">Paper</a>] | ||

| [<a href="https://github.com/yuweihao/MM-Vet/releases/download/v2/mm-vet-v2.zip">Download Dataset</a>] | ||

| [<a href="https://paperswithcode.com/sota/visual-question-answering-on-mm-vet-v2"><b>Leaderboard</b></a>] | ||

| [<a href="https://huggingface.co/spaces/whyu/MM-Vet-v2_Evaluator">Online Evaluator</a>] | ||

| </p> | ||

|

|

||

|

|

||

|

|

||

|  | ||

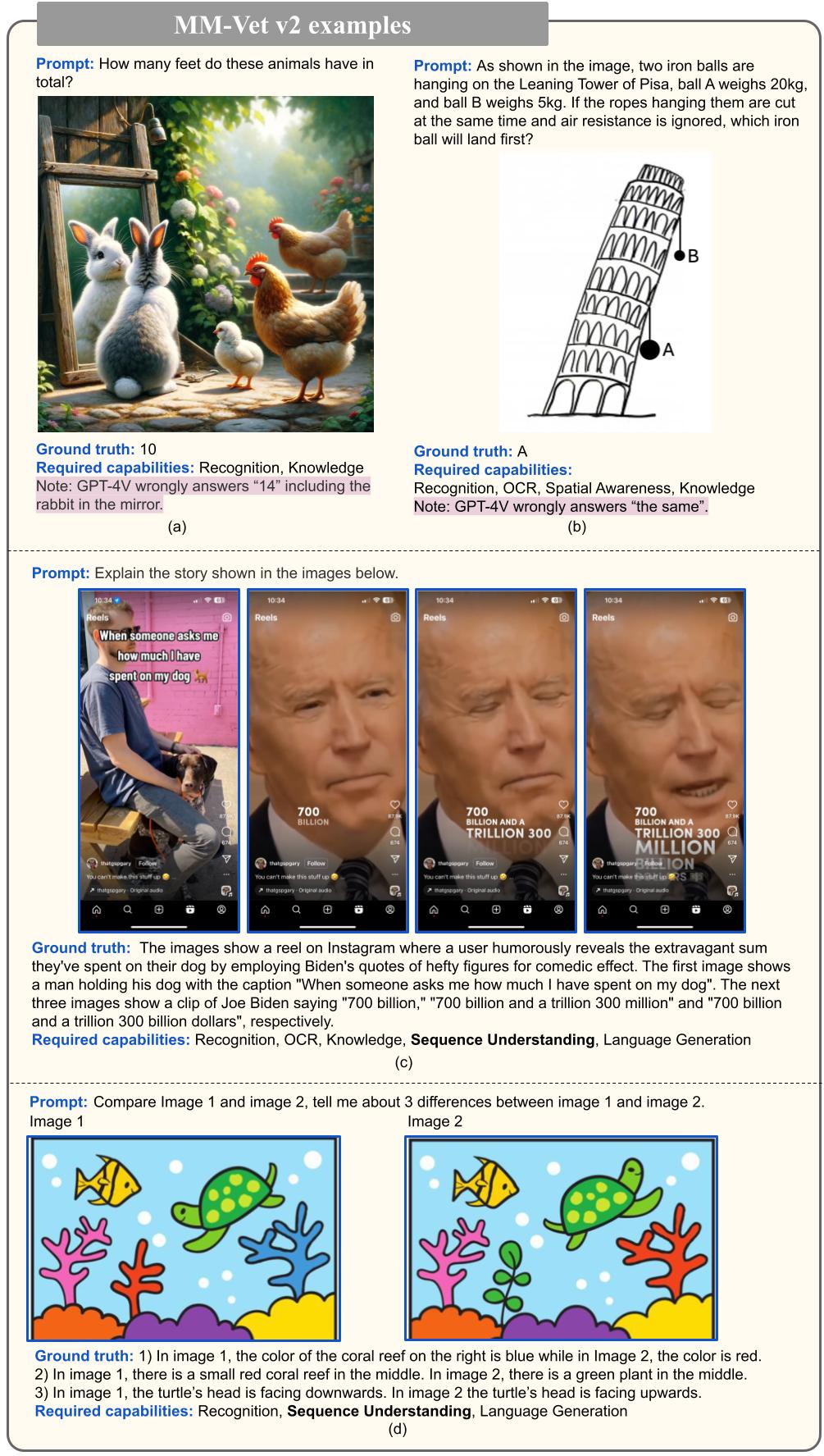

| Figure 1: Four examples from MM-Vet v2. Compared with MM-Vet, MM-Vet v2 introduces more high-quality evaluation samples (e.g., (a) and (b)), and the ones with the new capability of image-text sequence understanding (e.g., (c) and (d)). | ||

|

|

||

| ## Evaluate your model on MM-Vet v2 | ||

| **Step 0**: Install the openai package with `pip install "openai>=1"` and get access to the GPT-4 API. If you do not have access, you can use the MM-Vet v2 online evaluator on [Hugging Face Space](https://huggingface.co/spaces/whyu/MM-Vet-v2_Evaluator), though you may wait a long time depending on the number of users. | ||

|

|

||

| **Step 1**: Download the MM-Vet v2 data [here](https://github.com/yuweihao/MM-Vet/releases/download/v2/mm-vet-v2.zip) and unzip it with `unzip mm-vet-v2.zip`. | ||

|

|

||

| **Step 2**: Run your model on MM-Vet v2 and save its outputs as JSON in the same format as [gpt-4o-2024-05-13_detail-high.json](results/gpt-4o-2024-05-13_detail-high.json), or use that file directly as an example input for evaluation. We also release inference scripts for GPT-4, Claude and Gemini. | ||

|

|

||

| ```bash | ||

| image_detail=high # or auto, low; see https://platform.openai.com/docs/guides/vision/low-or-high-fidelity-image-understanding | ||

|

|

||

| python inference/gpt4.py --mmvetv2_path /path/to/mm-vet-v2 --model_name gpt-4o-2024-05-13 --image_detail ${image_detail} | ||

| ``` | ||

|

|

||

| ```bash | ||

| python inference/claude.py --mmvetv2_path /path/to/mm-vet-v2 --model_name claude-3-5-sonnet-20240620 | ||

| ``` | ||

|

|

||

| ```bash | ||

| python inference/gemini.py --mmvetv2_path /path/to/mm-vet-v2 --model_name gemini-1.5-pro | ||

| ``` | ||
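The result file written by the inference scripts in this commit is a JSON object that maps each sample ID to the model's answer string, dumped with `indent=4`. A minimal sketch of producing such a file for your own model follows. The helper name `save_results`, the sample IDs, and the answers are hypothetical placeholders, not taken from the dataset.

```python
import json
import os
import tempfile


def save_results(results, result_dir, model_name):
    """Write {sample_id: answer} to <result_dir>/<model_name>.json and return the path."""
    os.makedirs(result_dir, exist_ok=True)
    results_path = os.path.join(result_dir, f"{model_name}.json")
    with open(results_path, "w") as f:
        json.dump(results, f, indent=4)
    return results_path


# Hypothetical IDs and answers, for illustration only.
example_results = {"sample_a": "A cat sitting on a sofa.", "sample_b": "42"}

demo_dir = tempfile.mkdtemp()
demo_path = save_results(example_results, demo_dir, "my-model")

# Reload the file to confirm it round-trips as a plain dict of strings.
with open(demo_path) as f:
    reloaded = json.load(f)
```

Real sample IDs should come from the keys of `mm-vet-v2.json`, which the inference scripts iterate over.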

|

|

||

| **Step 3**: Run `git clone https://github.com/yuweihao/MM-Vet.git && cd MM-Vet/v2`, then run the LLM-based evaluator: | ||

| ```bash | ||

| python mm-vet-v2_evaluator.py --mmvetv2_path /path/to/mm-vet-v2 --result_file results/gpt-4o-2024-05-13_detail-high.json | ||

| ``` | ||

| If you cannot access GPT-4 (gpt-4-0613), you can upload your model outputs (a JSON file) to the MM-Vet v2 online evaluator on [Hugging Face Space](https://huggingface.co/spaces/whyu/MM-Vet-v2_Evaluator) to get the grading results. | ||
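Before running the evaluator or uploading a file, it can help to check that the result file has an entry for every sample. The sketch below assumes, as the inference scripts do, that `mm-vet-v2.json` is a JSON object keyed by sample ID. The function name `missing_ids` and the stand-in data are hypothetical.

```python
def missing_ids(meta_data, results):
    """Return the sample IDs that appear in the metadata but not in the results."""
    return [sample_id for sample_id in meta_data if sample_id not in results]


# Hypothetical stand-ins for the contents of mm-vet-v2.json and a result file.
meta = {"sample_a": {"question": "..."}, "sample_b": {"question": "..."}}
res = {"sample_a": "A cat."}

print(missing_ids(meta, res))  # ['sample_b']
```

Only absent keys are reported. An empty answer string is treated as present, since an inference script may store one deliberately.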

|

|

||

|

|

||

| ## Citation | ||

| ``` | ||

| @article{yu2024mmvetv2, | ||

| title={MM-Vet v2: A Challenging Benchmark to Evaluate Large Multimodal Models for Integrated Capabilities}, | ||

| author={Weihao Yu and Zhengyuan Yang and Lingfeng Ren and Linjie Li and Jianfeng Wang and Kevin Lin and Chung-Ching Lin and Zicheng Liu and Lijuan Wang and Xinchao Wang}, | ||

| journal={arXiv preprint arXiv:xxxx.xxxxx}, | ||

| year={2024} | ||

| } | ||

| ``` |


| Original file line number | Diff line number | Diff line change |

|---|---|---|

| @@ -0,0 +1,146 @@ | ||

| import json | ||

| import time | ||

| import os | ||

| import base64 | ||

| import requests | ||

| import argparse | ||

| import anthropic | ||

|

|

||

|

|

||

| # Function to encode the image | ||

| def encode_image(image_path): | ||

| with open(image_path, "rb") as image_file: | ||

| return base64.b64encode(image_file.read()).decode('utf-8') | ||

|

|

||

|

|

||

| class Claude: | ||

| def __init__(self, api_key, | ||

| model="claude-3-5-sonnet-20240620", temperature=0.0, | ||

| max_tokens=512, system=None): | ||

| self.model = model | ||

| self.client = anthropic.Anthropic( | ||

| api_key=api_key, | ||

| ) | ||

| self.system = system | ||

| self.temperature = temperature | ||

| self.max_tokens = max_tokens | ||

|

|

||

| def get_response(self, image_folder, prompt="What's in this image?"): | ||

| messages = [] | ||

| content = [] | ||

| queries = prompt.split("<IMG>") | ||

| img_num = 0 | ||

| for query in queries: | ||

| query = query.strip() | ||

| if query == "": | ||

| continue | ||

| if query.endswith((".jpg", ".png", ".jpeg")): | ||

| image_path = os.path.join(image_folder, query) | ||

| base64_image = encode_image(image_path) | ||

| image_format = "png" if image_path.endswith('.png') else "jpeg" | ||

| content.append( | ||

| { | ||

| "type": "image", | ||

| "source": { | ||

| "type": "base64", | ||

| "media_type": f"image/{image_format}", | ||

| "data": base64_image, | ||

| } | ||

| } | ||

| ) | ||

| img_num += 1 | ||

| else: | ||

| content.append( | ||

| { | ||

| "type": "text", | ||

| "text": query | ||

| }, | ||

| ) | ||

|

|

||

| messages.append({ | ||

| "role": "user", | ||

| "content": content, | ||

| }) | ||

|

|

||

| payload = { | ||

| "model": self.model, | ||

| "messages": messages, | ||

| "max_tokens": self.max_tokens, | ||

| "temperature": self.temperature, | ||

| } | ||

|

|

||

| if self.system: | ||

| payload["system"] = self.system | ||

|

|

||

| response = self.client.messages.create(**payload) | ||

| response_text = response.content[0].text | ||

| return response_text | ||

|

|

||

|

|

||

| def arg_parser(): | ||

| parser = argparse.ArgumentParser() | ||

| parser.add_argument( | ||

| "--mmvetv2_path", | ||

| type=str, | ||

| default="/path/to/mm-vet-v2", | ||

| help="Download mm-vet-v2.zip and `unzip mm-vet-v2.zip` and change the path here", | ||

| ) | ||

| parser.add_argument( | ||

| "--result_path", | ||

| type=str, | ||

| default="results", | ||

| ) | ||

| parser.add_argument( | ||

| "--anthropic_api_key", type=str, default=None, | ||

| help="Anthropic API key; if omitted, the ANTHROPIC_API_KEY environment variable is used" | ||

| ) | ||

| parser.add_argument( | ||

| "--model_name", | ||

| type=str, | ||

| default="claude-3-5-sonnet-20240620", | ||

| help="Claude model name", | ||

| ) | ||

| args = parser.parse_args() | ||

| return args | ||

|

|

||

|

|

||

| if __name__ == "__main__": | ||

| args = arg_parser() | ||

| model_name = args.model_name | ||

| if not os.path.exists(args.result_path): | ||

| os.makedirs(args.result_path) | ||

| results_path = os.path.join(args.result_path, f"{model_name}.json") | ||

| image_folder = os.path.join(args.mmvetv2_path, "images") | ||

| meta_data = os.path.join(args.mmvetv2_path, "mm-vet-v2.json") | ||

|

|

||

|

|

||

| if args.anthropic_api_key: | ||

| ANTHROPIC_API_KEY = args.anthropic_api_key | ||

| else: | ||

| ANTHROPIC_API_KEY = os.getenv('ANTHROPIC_API_KEY') | ||

|

|

||

| if ANTHROPIC_API_KEY is None: | ||

| raise ValueError("Please set the ANTHROPIC_API_KEY environment variable or pass it as an argument") | ||

|

|

||

| claude = Claude(ANTHROPIC_API_KEY, model=model_name) | ||

|

|

||

| if os.path.exists(results_path): | ||

| with open(results_path, "r") as f: | ||

| results = json.load(f) | ||

| else: | ||

| results = {} | ||

|

|

||

| with open(meta_data, "r") as f: | ||

| data = json.load(f) | ||

|

|

||

| for id in data: | ||

| if id in results: | ||

| continue | ||

| prompt = data[id]["question"].strip() | ||

| print(id) | ||

| print(f"Prompt: {prompt}") | ||

| response = claude.get_response(image_folder, prompt) | ||

| print(f"Response: {response}") | ||

| results[id] = response | ||

| with open(results_path, "w") as f: | ||

| json.dump(results, f, indent=4) |
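The `get_response` method above treats each question as text interleaved with image filenames, separated by the `<IMG>` marker. The standalone sketch below isolates that parsing step so it can be tried without an API key. The function name `split_prompt`, the `path` field, and the example question are assumptions made for illustration. The real script base64-encodes each image instead of keeping its path.

```python
import os

IMAGE_SUFFIXES = (".jpg", ".png", ".jpeg")


def split_prompt(prompt, image_folder):
    """Split an interleaved prompt on "<IMG>" into ordered text and image parts."""
    parts = []
    for piece in prompt.split("<IMG>"):
        piece = piece.strip()
        if not piece:
            continue  # skip empty segments, e.g. when the prompt starts with <IMG>
        if piece.endswith(IMAGE_SUFFIXES):
            # Same rule as the script: PNG stays PNG, everything else is sent as JPEG.
            media_type = "image/png" if piece.endswith(".png") else "image/jpeg"
            parts.append({
                "type": "image",
                "path": os.path.join(image_folder, piece),
                "media_type": media_type,
            })
        else:
            parts.append({"type": "text", "text": piece})
    return parts


# Hypothetical question string, not taken from the dataset.
demo = split_prompt("What differs between<IMG>a.jpg<IMG>and<IMG>b.png<IMG>?", "images")
for part in demo:
    print(part)
```

One consequence of this rule is that a text segment that happens to end in `.jpg`, `.png`, or `.jpeg` would be classified as an image.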


| Original file line number | Diff line number | Diff line change |

|---|---|---|

| @@ -0,0 +1,94 @@ | ||

| import os | ||

| import time | ||

| from pathlib import Path | ||

| import argparse | ||

| import json | ||

| import google.generativeai as genai | ||

| from utils import evaluate_on_mmvetv2 | ||

|

|

||

|

|

||

| class Gemini: | ||

| def __init__(self, model="gemini-1.5-pro"): | ||

| self.model = genai.GenerativeModel(model) | ||

|

|

||

| def get_response(self, image_folder, prompt="What's in this image?") -> str: | ||

|

|

||

| content = [] | ||

| queries = prompt.split("<IMG>") | ||

| img_num = 0 | ||

| for query in queries: | ||

| if query.endswith((".jpg", ".png", ".jpeg")): | ||

| image_path = Path(os.path.join(image_folder, query)) | ||

| image = { | ||

| 'mime_type': f'image/{image_path.suffix[1:].replace("jpg", "jpeg")}', | ||

| 'data': image_path.read_bytes() | ||

| } | ||

| img_num += 1 | ||

| content.append(image) | ||

| else: | ||

| content.append(query) | ||

|

|

||

| if img_num > 16: | ||

| return "" | ||

| # Query the model | ||

| text = "" | ||

| while len(text) < 1: | ||

| try: | ||

| response = self.model.generate_content( | ||

| content | ||

| ) | ||

| try: | ||

| text = response.text | ||

| except Exception: # response.text can raise when no text part was returned | ||

| text = " " | ||

| except Exception as error: | ||

| print(error) | ||

| print('Sleeping for 10 seconds') | ||

| time.sleep(10) | ||

| return text.strip() | ||

|

|

||

|

|

||

| def arg_parser(): | ||

| parser = argparse.ArgumentParser() | ||

| parser.add_argument( | ||

| "--mmvetv2_path", | ||

| type=str, | ||

| default="/path/to/mm-vet-v2", | ||

| help="Download mm-vet-v2.zip and `unzip mm-vet-v2.zip` and change the path here", | ||

| ) | ||

| parser.add_argument( | ||

| "--result_path", | ||

| type=str, | ||

| default="results", | ||

| ) | ||

| parser.add_argument( | ||

| "--google_api_key", type=str, default=None, | ||

| help="refer to https://ai.google.dev/tutorials/python_quickstart" | ||

| ) | ||

| parser.add_argument( | ||

| "--model_name", | ||

| type=str, | ||

| default="gemini-1.5-pro", | ||

| help="Gemini model name", | ||

| ) | ||

| args = parser.parse_args() | ||

| return args | ||

|

|

||

|

|

||

| if __name__ == "__main__": | ||

| args = arg_parser() | ||

|

|

||

| if args.google_api_key: | ||

| GOOGLE_API_KEY = args.google_api_key | ||

| else: | ||

| GOOGLE_API_KEY = os.getenv('GOOGLE_API_KEY') | ||

|

|

||

| if GOOGLE_API_KEY is None: | ||

| raise ValueError("Please set the GOOGLE_API_KEY environment variable or pass it as an argument") | ||

|

|

||

| genai.configure(api_key=GOOGLE_API_KEY) | ||

| model = Gemini(model=args.model_name) | ||

|

|

||

| evaluate_on_mmvetv2(args, model) | ||

|

|

||

|

|
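The Gemini wrapper above retries a failed request after sleeping for 10 seconds and keeps looping until it gets text back. The sketch below is a bounded variant of that idea, not the script's own logic: it gives up after a fixed number of attempts and returns an empty string. The function name `generate_with_retry` and its parameters are hypothetical, and `generate` stands in for any zero-argument callable that returns the model's text.

```python
import time


def generate_with_retry(generate, max_attempts=5, delay_seconds=10.0):
    """Call generate() until it succeeds, retrying on errors up to max_attempts times."""
    for attempt in range(1, max_attempts + 1):
        try:
            return generate().strip()
        except Exception as error:
            print(f"Attempt {attempt} failed: {error}")
            if attempt < max_attempts:
                time.sleep(delay_seconds)
    return ""  # every attempt failed


# A stand-in for an API call that fails twice before succeeding.
calls = {"n": 0}


def flaky():
    calls["n"] += 1
    if calls["n"] < 3:
        raise RuntimeError("temporary failure")
    return " ok "


answer = generate_with_retry(flaky, delay_seconds=0)
print(answer)  # ok
```

Capping the attempts trades completeness for predictability: a permanently failing sample yields an empty answer instead of blocking the rest of the run.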
