

+

+

+

+

+

+

+```

+

+The presentation markup hierarchy needs to be `.reveal > .slides > section` where the `section` represents one slide and can be repeated indefinitely. If you place multiple `section` elements inside of another `section` they will be shown as vertical slides. The first of the vertical slides is the "root" of the others (at the top), and will be included in the horizontal sequence. For example:

+

+```html

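+<div class="reveal">
+  <div class="slides">
+    <section>Single Horizontal Slide</section>
+    <section>
+      <section>Vertical Slide 1</section>
+      <section>Vertical Slide 2</section>
+    </section>
+  </div>
+</div>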

+```

+

+### Markdown

+

+It's possible to write your slides using Markdown. To enable Markdown, add the `data-markdown` attribute to your `<section>` elements.
+
+```javascript
+Reveal.initialize({
+ dependencies: [
+ // ...
+ { src: 'plugin/highlight/highlight.js', async: true },

+

+ // Zoom in and out with Alt+click

+ { src: 'plugin/zoom-js/zoom.js', async: true },

+

+ // Speaker notes

+ { src: 'plugin/notes/notes.js', async: true },

+

+ // MathJax

+ { src: 'plugin/math/math.js', async: true }

+ ]

+});

+```

+

+You can add your own extensions using the same syntax. The following properties are available for each dependency object:

+- **src**: Path to the script to load

+- **async**: [optional] Flags if the script should load after reveal.js has started, defaults to false

+- **callback**: [optional] Function to execute when the script has loaded

+- **condition**: [optional] Function which must return true for the script to be loaded
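To make the `async` and `condition` semantics concrete, here is a minimal sketch that does not use reveal.js at all. The hypothetical `planDependencies` helper drops entries whose `condition` returns false and splits the rest into scripts that load before reveal.js starts and scripts flagged `async`. The helper name and the `plugin/custom/custom.js` path are illustrative assumptions, and the sketch leaves out the `callback` property.

```javascript
// Hypothetical helper, not part of reveal.js: it only models how the
// dependency properties described above interact.
function planDependencies(dependencies) {
  const blocking = []; // loaded before reveal.js starts
  const deferred = []; // loaded after reveal.js has started (async: true)
  for (const dep of dependencies) {
    // `condition` is optional; when present it must return true to load
    if (typeof dep.condition === 'function' && !dep.condition()) continue;
    // `async` defaults to false, so a missing flag means "blocking"
    if (dep.async) {
      deferred.push(dep.src);
    } else {
      blocking.push(dep.src);
    }
  }
  return { blocking, deferred };
}

const plan = planDependencies([
  { src: 'plugin/notes/notes.js', async: true },
  { src: 'plugin/custom/custom.js' }, // hypothetical extension
  { src: 'plugin/math/math.js', async: true, condition: () => false }
]);

console.log(plan.blocking); // [ 'plugin/custom/custom.js' ]
console.log(plan.deferred); // [ 'plugin/notes/notes.js' ]
```

In this model, the `blocking` list is the set of scripts that the `ready` event waits for, since it fires once all non-async dependencies have loaded.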

+

+### Ready Event

+

+A `ready` event is fired when reveal.js has loaded all non-async dependencies and is ready to start navigating. To check if reveal.js is already 'ready' you can call `Reveal.isReady()`.

+

+```javascript

+Reveal.addEventListener( 'ready', function( event ) {

+ // event.currentSlide, event.indexh, event.indexv

+} );

+```

+

+Note that we also add a `.ready` class to the `.reveal` element so that you can hook into this with CSS.

+

+### Auto-sliding

+

+Presentations can be configured to progress through slides automatically, without any user input. To enable this you will need to tell the framework how many milliseconds it should wait between slides:

+

+```javascript

+// Slide every five seconds

+Reveal.configure({

+ autoSlide: 5000

+});

+```

+

+When this is turned on, a control element will appear that enables users to pause and resume auto-sliding. Alternatively, sliding can be paused or resumed by pressing »A« on the keyboard. Sliding is paused automatically as soon as the user starts navigating. You can disable these controls by specifying `autoSlideStoppable: false` in your reveal.js config.

+

+You can also override the slide duration for individual slides and fragments by using the `data-autoslide` attribute:

+

+```html

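+<section data-autoslide="2000">
+  <p>After 2 seconds the first fragment will be shown.</p>
+  <p class="fragment" data-autoslide="10000">After 10 seconds the next fragment will be shown.</p>
+  <p class="fragment">Now, the fragment is displayed for 2 seconds before the next slide is shown.</p>
+</section>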

+```
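The override rules in this example amount to a precedence chain: a fragment's `data-autoslide` beats its slide's, which beats the global `autoSlide` value. The sketch below models only that rule as a plain function. `effectiveAutoSlide` is a hypothetical name, and reveal.js's own implementation may handle further cases that are not shown here.

```javascript
// Hypothetical model of the data-autoslide precedence shown above.
// Attribute values are strings, or null when the attribute is absent,
// as returned by Element.getAttribute().
function effectiveAutoSlide(globalAutoSlide, slideAttr, fragmentAttr) {
  for (const value of [fragmentAttr, slideAttr]) {
    if (value !== null && value !== undefined) {
      const ms = parseInt(value, 10);
      if (!Number.isNaN(ms)) return ms;
    }
  }
  return globalAutoSlide;
}

// Global autoSlide of 5000 ms, slide data-autoslide="2000"
console.log(effectiveAutoSlide(5000, '2000', null));    // 2000: slide value applies
console.log(effectiveAutoSlide(5000, '2000', '10000')); // 10000: fragment override wins
console.log(effectiveAutoSlide(5000, null, null));      // 5000: no overrides at all
```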

+

+To override the method used for navigation when auto-sliding, you can specify the `autoSlideMethod` setting. To only navigate along the top layer and ignore vertical slides, set this to `Reveal.navigateRight`.

+

+Whenever the auto-slide mode is resumed or paused the `autoslideresumed` and `autoslidepaused` events are fired.

+

+### Keyboard Bindings

+

+If you're unhappy with any of the default keyboard bindings you can override them using the `keyboard` config option:

+

+```javascript

+Reveal.configure({

+ keyboard: {

+ 13: 'next', // go to the next slide when the ENTER key is pressed

+ 27: function() {}, // do something custom when ESC is pressed

+ 32: null // don't do anything when SPACE is pressed (i.e. disable a reveal.js default binding)

+ }

+});

+```

+

+### Vertical Slide Navigation

+

+Slides can be nested within other slides to create vertical stacks (see [Markup](#markup)). When presenting, you use the left/right arrows to step through the main (horizontal) slides. When you arrive at a vertical stack you can optionally press the up/down arrows to view the vertical slides or skip past them by pressing the right arrow. Here's an example showing a bird's-eye view of what this looks like in action:

+


+#### Navigation Mode
+You can fine-tune the reveal.js navigation behavior by using the `navigationMode` config option. Note that these options are only useful for presentations that use a mix of horizontal and vertical slides. The following navigation modes are available:
+
+| Value   | Description |
+| :------ | :---------- |
+| default | Left/right arrow keys step between horizontal slides. Up/down arrow keys step between vertical slides. Space key steps through all slides (both horizontal and vertical). |
+| linear  | Removes the up/down arrows. Left/right arrows step through all slides (both horizontal and vertical). |
+| grid    | When this is enabled, stepping left/right from a vertical stack to an adjacent vertical stack will land you at the same vertical index. |
+
+Consider a deck with six slides ordered in two vertical stacks:
+
+| Stack 1 | Stack 2 |
+| :------ | :------ |
+| 1.1     | 2.1     |
+| 1.2     | 2.2     |
+| 1.3     | 2.3     |
+
+If you're on slide 1.3 and navigate right, you will normally move from 1.3 -> 2.1. With `navigationMode` set to `"grid"`, the same navigation takes you from 1.3 -> 2.3.
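The difference between the three modes when moving right can be modelled with zero-based indices, where `{ h: 0, v: 2 }` is slide 1.3. This is a hypothetical sketch rather than reveal.js code. The deck is described by an array of stack heights (`[3, 3]` for the two stacks above). Clamping to a shorter neighbouring stack in grid mode is an assumption that the text does not cover.

```javascript
// Hypothetical model of "navigate right" under each navigationMode value.
// stackHeights[h] is the number of slides in horizontal position h.
function navigateRight(indices, stackHeights, navigationMode) {
  const { h, v } = indices;
  // linear: the right arrow first steps down through the current stack
  if (navigationMode === 'linear' && v + 1 < stackHeights[h]) {
    return { h, v: v + 1 };
  }
  if (h + 1 >= stackHeights.length) return { h, v }; // already on the last stack
  if (navigationMode === 'grid') {
    // keep the vertical index (assumed: clamped if the next stack is shorter)
    return { h: h + 1, v: Math.min(v, stackHeights[h + 1] - 1) };
  }
  return { h: h + 1, v: 0 }; // default: land on the top of the next stack
}

const deck = [3, 3];
console.log(navigateRight({ h: 0, v: 2 }, deck, 'default')); // { h: 1, v: 0 } i.e. 1.3 -> 2.1
console.log(navigateRight({ h: 0, v: 2 }, deck, 'grid'));    // { h: 1, v: 2 } i.e. 1.3 -> 2.3
console.log(navigateRight({ h: 0, v: 0 }, deck, 'linear'));  // { h: 0, v: 1 } i.e. 1.1 -> 1.2
```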

+

+### Touch Navigation

+

+You can swipe to navigate through a presentation on any touch-enabled device. Horizontal swipes change between horizontal slides; vertical swipes change between vertical slides. If you wish to disable this, you can set the `touch` config option to `false` when initializing reveal.js.

+

+If some part of your content needs to remain accessible to touch events, mark it by adding a `data-prevent-swipe` attribute to the element. A common example is an element that needs to be scrolled.
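A swipe can be mapped to a navigation direction by comparing horizontal and vertical travel and letting the dominant axis win. This is a minimal sketch under stated assumptions. `classifySwipe` and the 40 px minimum distance are hypothetical and are not reveal.js settings. The sketch also assumes the common convention that moving a finger left advances to the slide on the right.

```javascript
// Hypothetical swipe classifier; the threshold is an assumed value.
const MIN_SWIPE_PX = 40;

// Returns the navigation direction for a touch that moved from
// (startX, startY) to (endX, endY), or null if it was too short.
function classifySwipe(startX, startY, endX, endY) {
  const dx = endX - startX;
  const dy = endY - startY;
  if (Math.max(Math.abs(dx), Math.abs(dy)) < MIN_SWIPE_PX) return null;
  if (Math.abs(dx) > Math.abs(dy)) {
    // horizontal swipe: a finger moving left reveals the slide to the right
    return dx < 0 ? 'right' : 'left';
  }
  // vertical swipe: a finger moving up reveals the slide below
  return dy < 0 ? 'down' : 'up';
}

console.log(classifySwipe(300, 200, 120, 210)); // right
console.log(classifySwipe(300, 200, 305, 80));  // down
console.log(classifySwipe(300, 200, 310, 205)); // null
```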

+

+### Lazy Loading

+

+When working on a presentation with a lot of media or iframe content, it's important to load lazily. Lazy loading means that reveal.js will only load content for the few slides nearest to the current slide. The number of slides that are preloaded is determined by the `viewDistance` configuration option.

+

+To enable lazy loading all you need to do is change your `src` attributes to `data-src` as shown below. This is supported for image, video, audio and iframe elements.

+

+```html

+

+  +

+

+

+

+

+

+```
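To get a feel for how `viewDistance` limits loading, the sketch below lists which horizontal slides would count as near the current one in a single row of slides. It is a simplification, not reveal.js code. `slidesWithinViewDistance` is a hypothetical helper, it ignores vertical stacks, and treating a slide as near when its distance is strictly less than `viewDistance` is an assumption.

```javascript
// Hypothetical helper: indices of horizontal slides whose distance from the
// current slide is below viewDistance (assumed boundary rule).
function slidesWithinViewDistance(currentIndex, totalSlides, viewDistance) {
  const near = [];
  for (let i = 0; i < totalSlides; i++) {
    if (Math.abs(i - currentIndex) < viewDistance) near.push(i);
  }
  return near;
}

console.log(slidesWithinViewDistance(5, 10, 3)); // [ 3, 4, 5, 6, 7 ]
console.log(slidesWithinViewDistance(0, 10, 3)); // [ 0, 1, 2 ]
```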

+

+#### Lazy Loading Iframes

+

+Note that lazy loaded iframes ignore the `viewDistance` configuration and will only load when their containing slide becomes visible. Iframes are also unloaded as soon as the slide is hidden.

+

+When we lazy load a video or audio element, reveal.js won't start playing that content until the slide becomes visible. However, there is no way to control this for an iframe, since it could contain any kind of content. That means if we loaded an iframe before the slide is visible on screen, it could begin playing media and sound in the background.

+

+You can override this behavior with the `data-preload` attribute. The iframe below will be loaded

+according to the `viewDistance`.

+

+```html

+

+

+

+```

+

+You can also change the default globally with the `preloadIframes` configuration option. If set to

+`true` ALL iframes with a `data-src` attribute will be preloaded when within the `viewDistance`

+regardless of individual `data-preload` attributes. If set to `false`, all iframes will only be

+loaded when they become visible.
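The three controls on iframe loading (visibility, the per-iframe `data-preload` attribute and the global `preloadIframes` option) combine as described above. Here is a minimal sketch of that decision. The option and attribute names come from the text. The function name and its argument object are illustrative assumptions, with `null` standing for `preloadIframes` being left unset.

```javascript
// Hypothetical decision function for a lazy-loaded iframe (one with data-src).
// preloadIframes is true, false, or null when the option is left unset.
function shouldLoadIframe({ isVisible, withinViewDistance, hasDataPreload, preloadIframes }) {
  if (isVisible) return true;                             // visible slides always load
  if (preloadIframes === true) return withinViewDistance; // global opt-in wins
  if (preloadIframes === false) return false;             // only load when visible
  return hasDataPreload && withinViewDistance;            // per-iframe data-preload
}

const nearby = { isVisible: false, withinViewDistance: true };
console.log(shouldLoadIframe({ ...nearby, hasDataPreload: false, preloadIframes: null })); // false
console.log(shouldLoadIframe({ ...nearby, hasDataPreload: true, preloadIframes: null }));  // true
console.log(shouldLoadIframe({ ...nearby, hasDataPreload: true, preloadIframes: false })); // false
```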

+

+### API

+

+The `Reveal` object exposes a JavaScript API for controlling navigation and reading state:

+

+```javascript

+// Navigation

+Reveal.slide( indexh, indexv, indexf );

+Reveal.left();

+Reveal.right();

+Reveal.up();

+Reveal.down();

+Reveal.prev();

+Reveal.next();

+Reveal.prevFragment();

+Reveal.nextFragment();

+

+// Randomize the order of slides

+Reveal.shuffle();

+

+// Toggle presentation states, optionally pass true/false to force on/off

+Reveal.toggleOverview();

+Reveal.togglePause();

+Reveal.toggleAutoSlide();

+

+// Shows a help overlay with keyboard shortcuts, optionally pass true/false

+// to force on/off

+Reveal.toggleHelp();

+

+// Change a config value at runtime

+Reveal.configure({ controls: true });

+

+// Returns the present configuration options

+Reveal.getConfig();

+

+// Fetch the current scale of the presentation

+Reveal.getScale();

+

+// Retrieves the previous and current slide elements

+Reveal.getPreviousSlide();

+Reveal.getCurrentSlide();

+

+Reveal.getIndices(); // { h: 0, v: 0, f: 0 }

+Reveal.getSlidePastCount();

+Reveal.getProgress(); // (0 == first slide, 1 == last slide)

+Reveal.getSlides(); // Array of all slides

+Reveal.getTotalSlides(); // Total number of slides

+

+// Returns the speaker notes for the current slide

+Reveal.getSlideNotes();

+

+// State checks

+Reveal.isFirstSlide();

+Reveal.isLastSlide();

+Reveal.isOverview();

+Reveal.isPaused();

+Reveal.isAutoSliding();

+

+// Returns the top-level DOM element

+Reveal.getRevealElement(); // ...

+```

+

+### Custom Key Bindings

+

+Custom key bindings can be added and removed using the following JavaScript API. Custom key bindings will override the default keyboard bindings, but will in turn be overridden by the user-defined bindings in the `keyboard` config option.

+

+```javascript

+Reveal.addKeyBinding( binding, callback );

+Reveal.removeKeyBinding( keyCode );

+```

+

+For example:

+

+```javascript

+// The binding parameter provides the following properties

+// keyCode: the keycode for binding to the callback

+// key: the key label to show in the help overlay

+// description: the description of the action to show in the help overlay

+Reveal.addKeyBinding( { keyCode: 84, key: 'T', description: 'Start timer' }, function() {

+ // start timer

+} )

+

+// The binding parameter can also be a direct keycode without providing the help description

+Reveal.addKeyBinding( 82, function() {

+ // reset timer

+} )

+```

+

+This allows plugins to add key bindings directly to Reveal so they can

+

+* make use of Reveal's pre-processing logic for key handling (for example, ignoring key presses when paused); and

+* be included in the help overlay (optional)
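The precedence described above (the `keyboard` config option overrides custom bindings, which override the defaults) can be modelled as a lookup chain. The sketch below is hypothetical and independent of reveal.js. `resolveKeyAction`, the example default table and the action strings are made up for illustration. Only the ordering and the meaning of `null` come from this document.

```javascript
// Hypothetical lookup chain: keyboard config > addKeyBinding() bindings > defaults.
function resolveKeyAction(keyCode, keyboardConfig, customBindings, defaults) {
  // An explicit config entry wins, even when it is null (binding disabled)
  if (Object.prototype.hasOwnProperty.call(keyboardConfig, keyCode)) {
    return keyboardConfig[keyCode];
  }
  if (customBindings.has(keyCode)) return customBindings.get(keyCode);
  return defaults[keyCode] ?? null; // null: nothing bound to this key
}

const defaults = { 32: 'next', 39: 'right' };    // illustrative subset
const customBindings = new Map([
  [84, 'start timer'],                           // added by a plugin
  [39, 'plugin right']                           // overrides a default
]);
const keyboardConfig = { 13: 'next', 32: null }; // as passed to Reveal.configure()

console.log(resolveKeyAction(32, keyboardConfig, customBindings, defaults)); // null
console.log(resolveKeyAction(39, keyboardConfig, customBindings, defaults)); // plugin right
console.log(resolveKeyAction(84, keyboardConfig, customBindings, defaults)); // start timer
```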

+

+### Slide Changed Event

+

+A `slidechanged` event is fired each time the slide is changed (regardless of state). The event object holds the index values of the current slide as well as a reference to the previous and current slide HTML nodes.

+

+Some libraries, like MathJax (see [#226](https://github.com/hakimel/reveal.js/issues/226#issuecomment-10261609)), get confused by the transforms and display states of slides. Often, this can be fixed by calling their update or render function from this callback.

+

+```javascript

+Reveal.addEventListener( 'slidechanged', function( event ) {

+ // event.previousSlide, event.currentSlide, event.indexh, event.indexv

+} );

+```

+

+### Presentation State

+

+The presentation's current state can be fetched by using the `getState` method. A state object contains all of the information required to put the presentation back as it was when `getState` was called, much like a snapshot. It's a simple object that can easily be stringified and persisted or sent over the wire.

+

+```javascript

+Reveal.slide( 1 );

+// we're on slide 1

+

+var state = Reveal.getState();

+

+Reveal.slide( 3 );

+// we're on slide 3

+

+Reveal.setState( state );

+// we're back on slide 1

+```

+

+### Slide States

+

+If you set `data-state="somestate"` on a slide `<section>`, "somestate" will be applied as a class on the document element when that slide is opened. This allows you to apply broad style changes to the page based on the active slide.

+

+Furthermore you can also listen to these changes in state via JavaScript:

+

+```javascript

+Reveal.addEventListener( 'somestate', function() {

+ // TODO: Sprinkle magic

+}, false );

+```

+

+### Slide Backgrounds

+

+Slides are contained within a limited portion of the screen by default to allow them to fit any display and scale uniformly. You can apply full page backgrounds outside of the slide area by adding a `data-background` attribute to your `<section>` elements. Four different types of backgrounds are supported: color, image, video and iframe.

+

+#### Color Backgrounds

+

+All CSS color formats are supported, including hex values, keywords, `rgba()` or `hsl()`.

+

+```html

+

+ Color

+

+```

+

+#### Image Backgrounds

+

+By default, background images are resized to cover the full page. Available options:

+

+| Attribute | Default | Description |

+| :------------------------------- | :--------- | :---------- |

+| data-background-image | | URL of the image to show. GIFs restart when the slide opens. |

+| data-background-size | cover | See [background-size](https://developer.mozilla.org/docs/Web/CSS/background-size) on MDN. |

+| data-background-position | center | See [background-position](https://developer.mozilla.org/docs/Web/CSS/background-position) on MDN. |

+| data-background-repeat | no-repeat | See [background-repeat](https://developer.mozilla.org/docs/Web/CSS/background-repeat) on MDN. |

+| data-background-opacity | 1 | Opacity of the background image on a 0-1 scale. 0 is transparent and 1 is fully opaque. |

+

+```html

+

+ Image

+

+

+ This background image will be sized to 100px and repeated

+

+```

+

+#### Video Backgrounds

+

+Automatically plays a full size video behind the slide.

+

+| Attribute | Default | Description |

+| :--------------------------- | :------ | :---------- |

+| data-background-video | | A single video source, or a comma separated list of video sources. |

+| data-background-video-loop | false | Flags if the video should play repeatedly. |

+| data-background-video-muted | false | Flags if the audio should be muted. |

+| data-background-size | cover | Use `cover` for full screen and some cropping or `contain` for letterboxing. |

+| data-background-opacity | 1 | Opacity of the background video on a 0-1 scale. 0 is transparent and 1 is fully opaque. |

+

+```html

+

+ Video

+

+```

+

+#### Iframe Backgrounds

+

+Embeds a web page as a slide background that covers 100% of the reveal.js width and height. The iframe is in the background layer, behind your slides, and as such it's not possible to interact with it by default. To make your background interactive, you can add the `data-background-interactive` attribute.

+

+```html

+

+ Iframe

+

+```

+

+#### Background Transitions

+

+Backgrounds transition using a fade animation by default. This can be changed to a linear sliding transition by passing `backgroundTransition: 'slide'` to the `Reveal.initialize()` call. Alternatively you can set `data-background-transition` on any section with a background to override that specific transition.

+

+

+### Parallax Background

+

+If you want to use a parallax scrolling background, set the first two properties below when initializing reveal.js (the other two are optional).

+

+```javascript

+Reveal.initialize({

+

+ // Parallax background image

+ parallaxBackgroundImage: '', // e.g. "https://s3.amazonaws.com/hakim-static/reveal-js/reveal-parallax-1.jpg"

+

+ // Parallax background size

+ parallaxBackgroundSize: '', // CSS syntax, e.g. "2100px 900px" - currently only pixels are supported (don't use % or auto)

+

+ // Number of pixels to move the parallax background per slide

+ // - Calculated automatically unless specified

+ // - Set to 0 to disable movement along an axis

+ parallaxBackgroundHorizontal: 200,

+ parallaxBackgroundVertical: 50

+

+});

+```

+

+Make sure that the background size is much bigger than the screen size to allow for some scrolling. [View example](http://revealjs.com/?parallaxBackgroundImage=https%3A%2F%2Fs3.amazonaws.com%2Fhakim-static%2Freveal-js%2Freveal-parallax-1.jpg&parallaxBackgroundSize=2100px%20900px).
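As a worked example of the per-slide movement, the sketch below computes a horizontal background offset. It rests on an assumption that this document does not spell out: when `parallaxBackgroundHorizontal` is omitted, the automatic value is taken to spread the extra background width evenly across the horizontal slides. `parallaxOffsetX` is a hypothetical helper, and the 960 px viewport width is an illustrative value.

```javascript
// Hypothetical calculation of the horizontal parallax offset (in px) for
// horizontal slide `indexh`. `perSlide` mirrors parallaxBackgroundHorizontal;
// when it is undefined an evenly spread step is assumed.
function parallaxOffsetX(indexh, slideCount, backgroundWidth, viewportWidth, perSlide) {
  let step = perSlide;
  if (step === undefined) {
    step = slideCount > 1 ? (backgroundWidth - viewportWidth) / (slideCount - 1) : 0;
  }
  const offset = indexh * step;
  return offset === 0 ? 0 : -offset; // avoid returning -0
}

// 2100px-wide background (as in the example config), 960px viewport, 4 slides:
// step = (2100 - 960) / 3 = 380px per slide
console.log(parallaxOffsetX(2, 4, 2100, 960));      // -760
// Explicit parallaxBackgroundHorizontal: 200
console.log(parallaxOffsetX(3, 4, 2100, 960, 200)); // -600
// 0 disables movement along the axis
console.log(parallaxOffsetX(3, 4, 2100, 960, 0));   // 0
```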

+

+### Slide Transitions

+

+The global presentation transition is set using the `transition` config value. You can override the global transition for a specific slide by using the `data-transition` attribute:

+

+```html

+

+ This slide will override the presentation transition and zoom!

+

+

+

+ Choose from three transition speeds: default, fast or slow!

+

+```

+

+You can also use different in and out transitions for the same slide:

+

+```html

+

+ The train goes on …

+

+

+ and on …

+

+

+ and stops.

+

+

+ (Passengers entering and leaving)

+

+

+ And it starts again.

+

+```

+You can choose from `none`, `fade`, `slide`, `convex`, `concave` and `zoom`.

+### Internal links

+

+It's easy to link between slides. The first example below targets the index of another slide, whereas the second targets a slide with an `id` attribute:

+

+```html

+Link

+Link

+```
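Both link styles are ordinary URL fragments. The sketch below assumes that the index form looks like `#/2/3` (horizontal index, then vertical index) and that the ID form looks like `#/some-slide`. Under those assumptions, a hypothetical parser could tell the two apart like this. It is a sketch, not reveal.js's own URL handling.

```javascript
// Hypothetical parser for slide links of the form "#/h/v" or "#/some-id".
function parseSlideHash(hash) {
  const bits = hash.replace(/^#\/?/, '').split('/');
  const h = parseInt(bits[0], 10);
  if (Number.isNaN(h)) {
    // Not a number: treat it as a slide id, or fall back to the first slide
    return bits[0] ? { id: decodeURIComponent(bits[0]) } : { h: 0, v: 0 };
  }
  const v = parseInt(bits[1], 10);
  return { h, v: Number.isNaN(v) ? 0 : v };
}

console.log(parseSlideHash('#/2/3'));        // { h: 2, v: 3 }
console.log(parseSlideHash('#/some-slide')); // { id: 'some-slide' }
console.log(parseSlideHash('#/4'));          // { h: 4, v: 0 }
```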

+

+You can also add relative navigation links, similar to the built-in reveal.js controls, by adding one of the following classes to any element. Note that each element is automatically given an `enabled` class when it's a valid navigation route based on the current slide.

+

+```html

+

+

+

+

+

+

+```

+

+### Fragments

+

+Fragments are used to highlight individual elements on a slide. Every element with the class `fragment` will be stepped through before moving on to the next slide. Here's an example: http://revealjs.com/#/fragments

+

+The default fragment style is to start out invisible and fade in. This style can be changed by appending a different class to the fragment:

+

+```html

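+<section>
+  <p class="fragment grow">grow</p>
+  <p class="fragment shrink">shrink</p>
+  <p class="fragment fade-out">fade-out</p>
+  <p class="fragment fade-up">fade-up (also down, left and right!)</p>
+  <p class="fragment fade-in-then-out">fades in, then out when we move to the next step</p>
+  <p class="fragment fade-in-then-semi-out">fades in, then obfuscate when we move to the next step</p>
+  <p class="fragment highlight-current-blue">blue only once</p>
+  <p class="fragment highlight-red">highlight-red</p>
+  <p class="fragment highlight-green">highlight-green</p>
+  <p class="fragment highlight-blue">highlight-blue</p>
+</section>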

+```

+

+Multiple fragments can be applied to the same element sequentially by wrapping it. The example below fades in the text on the first step and fades it back out on the second.

+

+```html

+<section>
+  <span class="fragment fade-in">
+    <span class="fragment fade-out">I'll fade in, then out</span>
+  </span>
+</section>

+```

+

+The display order of fragments can be controlled using the `data-fragment-index` attribute.

+

+```html

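+<section>
+  <p class="fragment" data-fragment-index="3">Appears last</p>
+  <p class="fragment" data-fragment-index="1">Appears first</p>
+  <p class="fragment" data-fragment-index="2">Appears second</p>
+</section>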

+```
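The effect of `data-fragment-index` can be modelled by grouping fragments by index and sorting the groups. In this hypothetical sketch, fragments that share an index are assumed to appear on the same step, and every fragment is assumed to carry an explicit index. `fragmentSteps` is an illustrative name.

```javascript
// Hypothetical model: fragments in document order -> display steps.
function fragmentSteps(fragments) {
  const byIndex = new Map();
  for (const { text, index } of fragments) {
    if (!byIndex.has(index)) byIndex.set(index, []);
    byIndex.get(index).push(text); // keep document order within a step
  }
  return [...byIndex.keys()].sort((a, b) => a - b).map((i) => byIndex.get(i));
}

// Mirrors the "Appears last/first/second" example
const steps = fragmentSteps([
  { text: 'Appears last', index: 3 },
  { text: 'Appears first', index: 1 },
  { text: 'Appears second', index: 2 }
]);
console.log(steps.map((s) => s.join(', ')).join(' | ')); // Appears first | Appears second | Appears last
```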

+

+### Fragment events

+

+When a slide fragment is either shown or hidden reveal.js will dispatch an event.

+

+Some libraries, like MathJax (see #505), get confused by the initially hidden fragment elements. Often, this can be fixed by calling their update or render function from this callback.

+

+```javascript

+Reveal.addEventListener( 'fragmentshown', function( event ) {

+ // event.fragment = the fragment DOM element

+} );

+Reveal.addEventListener( 'fragmenthidden', function( event ) {

+ // event.fragment = the fragment DOM element

+} );

+```

+

+### Code Syntax Highlighting

+

+By default, Reveal is configured with [highlight.js](https://highlightjs.org/) for code syntax highlighting. To enable syntax highlighting, you'll have to load the highlight plugin ([plugin/highlight/highlight.js](plugin/highlight/highlight.js)) and a highlight.js CSS theme (Reveal comes packaged with the Monokai theme: [lib/css/monokai.css](lib/css/monokai.css)).

+

+```javascript

+Reveal.initialize({

+ // More info https://github.com/hakimel/reveal.js#dependencies

+ dependencies: [

+ { src: 'plugin/highlight/highlight.js', async: true },

+ ]

+});

+```

+

+Below is an example with Clojure code that will be syntax highlighted. When the `data-trim` attribute is present, surrounding whitespace is automatically removed. HTML will be escaped by default. To avoid this, for example if you are using `<mark>` to call out a line of code, add the `data-noescape` attribute to the `<code>` element.

+

+```html

+<section>
+  <pre><code data-trim data-noescape>
+(def lazy-fib
+  (concat
+   [0 1]
+   ((fn rfib [a b]
+        (lazy-cons (+ a b) (rfib b (+ a b)))) 0 1)))
+  </code></pre>
+</section>

+```

+

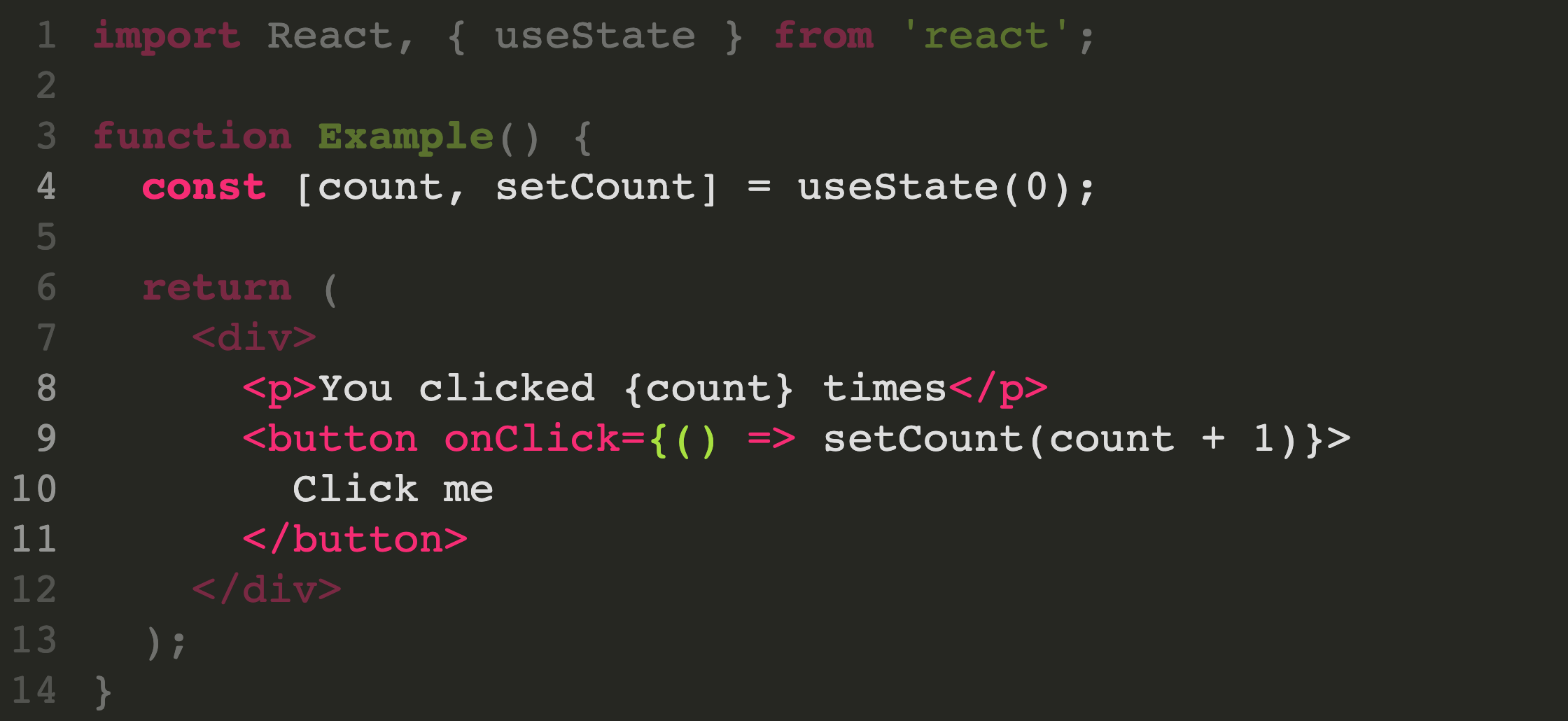

+#### Line Numbers & Highlights

+

+To enable line numbers, add `data-line-numbers` to your `<code>` tags. If you want to highlight specific lines, you can provide a comma-separated list of line numbers using the same attribute. In the following example, lines 4 and 8-11 are highlighted:

+

+```html

+

+import React, { useState } from 'react';

+

+function Example() {

+ const [count, setCount] = useState(0);

+

+ return (

+

+ You clicked {count} times

+

+

+ );

+}

+

+```
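A `data-line-numbers` value such as `4,8-11` is a comma-separated list of single lines and inclusive ranges. The hypothetical helper below expands it into individual line numbers. It only sketches the notation described above. It is not reveal.js's parser and ignores any other syntax the plugin may accept.

```javascript
// Hypothetical parser: "4,8-11" -> [4, 8, 9, 10, 11]
function parseLineHighlights(spec) {
  const lines = [];
  for (const part of spec.split(',')) {
    const trimmed = part.trim();
    if (!trimmed) continue; // tolerate stray commas
    const [startText, endText] = trimmed.split('-');
    const start = parseInt(startText, 10);
    const end = endText === undefined ? start : parseInt(endText, 10);
    for (let n = start; n <= end; n++) lines.push(n); // inclusive range
  }
  return lines;
}

console.log(parseLineHighlights('4,8-11')); // [ 4, 8, 9, 10, 11 ]
```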

+


+

+

+### Slide number

+

+If you would like to display the page number of the current slide, you can do so using the `slideNumber` and `showSlideNumber` configuration values.

+

+```javascript

+// Shows the slide number using default formatting

+Reveal.configure({ slideNumber: true });

+

+// Slide number formatting can be configured using these variables:

+// "h.v": horizontal . vertical slide number (default)

+// "h/v": horizontal / vertical slide number

+// "c": flattened slide number

+// "c/t": flattened slide number / total slides

+Reveal.configure({ slideNumber: 'c/t' });

+

+// You can provide a function to fully customize the number:

+Reveal.configure({ slideNumber: function() {

+ // Ignore numbering of vertical slides

+ return [ Reveal.getIndices().h ];

+}});

+

+// Control which views the slide number displays on using the "showSlideNumber" value:

+// "all": show on all views (default)

+// "speaker": only show slide numbers on speaker notes view

+// "print": only show slide numbers when printing to PDF

+Reveal.configure({ showSlideNumber: 'speaker' });

+```
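The four format strings can be illustrated with a small formatter. This is a hypothetical sketch, not reveal.js code. It assumes that numbers are shown 1-based and that the vertical part of `h.v` and `h/v` is omitted for slides that are not inside a vertical stack. `formatSlideNumber` and its argument object are illustrative.

```javascript
// Hypothetical formatter for the slideNumber format strings listed above.
// h and v are zero-based indices; pastCount is the number of slides before
// the current one and total is the total number of slides.
function formatSlideNumber(format, { h, v, isVertical, pastCount, total }) {
  switch (format) {
    case 'h/v':
      return isVertical ? `${h + 1}/${v + 1}` : `${h + 1}`;
    case 'c':
      return `${pastCount + 1}`;
    case 'c/t':
      return `${pastCount + 1}/${total}`;
    case 'h.v':
    default:
      return isVertical ? `${h + 1}.${v + 1}` : `${h + 1}`;
  }
}

const state = { h: 1, v: 2, isVertical: true, pastCount: 5, total: 12 };
console.log(formatSlideNumber('h.v', state)); // 2.3
console.log(formatSlideNumber('h/v', state)); // 2/3
console.log(formatSlideNumber('c/t', state)); // 6/12
```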

+

+### Overview mode

+

+Press »ESC« or »O« keys to toggle the overview mode on and off. While you're in this mode, you can still navigate between slides,

+as if you were at 1,000 feet above your presentation. The overview mode comes with a few API hooks:

+

+```javascript

+Reveal.addEventListener( 'overviewshown', function( event ) { /* ... */ } );

+Reveal.addEventListener( 'overviewhidden', function( event ) { /* ... */ } );

+

+// Toggle the overview mode programmatically

+Reveal.toggleOverview();

+```

+

+### Fullscreen mode

+

+Just press »F« on your keyboard to show your presentation in fullscreen mode. Press the »ESC« key to exit fullscreen mode.

+

+### Embedded media

+

+Add `data-autoplay` to your media element if you want it to automatically start playing when the slide is shown:

+

+```html

+

+```

+

+If you want to enable or disable autoplay globally, for all embedded media, you can use the `autoPlayMedia` configuration option. If you set this to `true` ALL media will autoplay regardless of individual `data-autoplay` attributes. If you initialize with `autoPlayMedia: false` NO media will autoplay.
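The interaction between `autoPlayMedia` and `data-autoplay` reduces to a three-way rule. Here is a minimal sketch, with `shouldAutoplay` as a hypothetical name and `null` standing for the option being left unset.

```javascript
// Hypothetical rule: a boolean autoPlayMedia overrides every element;
// when it is unset (null), each element's data-autoplay attribute decides.
function shouldAutoplay(autoPlayMedia, hasDataAutoplay) {
  if (typeof autoPlayMedia === 'boolean') return autoPlayMedia;
  return hasDataAutoplay;
}

// Columns: setting, element with data-autoplay, element without it
for (const setting of [null, true, false]) {
  console.log(setting, shouldAutoplay(setting, true), shouldAutoplay(setting, false));
}
// null true false
// true true true
// false false false
```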

+

+Note that embedded HTML5 `


+

+Presentation at SenseCamp 2019 hosted by FORCE Technology Senselab.

+Slides: [web](https://jonnor.github.io/machinehearing/pycode2019/slides.html),

+[.PDF](./sensecamp2019/slides.pdf)

+

## NMBU lecture on Audio Classification

diff --git a/sensecamp2019/Makefile b/sensecamp2019/Makefile

new file mode 100644

index 0000000..cee7a60

--- /dev/null

+++ b/sensecamp2019/Makefile

@@ -0,0 +1,6 @@

+

+slides:

+ pandoc -t revealjs -s presentation.md -o slides.html --slide-level=2 --mathml -V theme=white

+

+slides.pdf:

+ pandoc -t beamer -s presentation.md -o slides.pdf --slide-level=2 --mathml

diff --git a/sensecamp2019/README.md b/sensecamp2019/README.md

new file mode 100644

index 0000000..84652a0

--- /dev/null

+++ b/sensecamp2019/README.md

@@ -0,0 +1,216 @@

+

+# Context

+

+https://forcetechnology.com/en/events/2019/sensecamp-2019

+"Opportunities with Machine Learning in audio"

+

+

+09.45 - 10.25 Deep audio - data, representations and interactivity

+Lars Kai Hansen, Professor, DTU Compute - Technical University of Denmark

+10.25 - 11.05 On applying AI/ML in Audiology/Hearing aids

+Jens Brehm Bagger Nielsen, Architect, Machine Learning & Data Science, Widex

+11.05 - 11.45 Data-driven services in hearing health care

+Niels H. Pontoppidan, Research Area Manager, Augmented Hearing Science - Eriksholm Research Center

+

+

+## Format

+

+30 minutes, plus 10 minutes Q&A

+

+# TODO

+

+- Add some Research Projects at the end

+

+Pretty

+

+- Add Soundsensing logo to frontpage

+- Add Soundsensing logo to ending page

+- Add Soundsensing logo at bottom of each page

+

+

+# Goals

+

+From our POV

+

+1. Attract partners for Soundsensing

+Research institutes. Public or private.

+Joint technology development?

+2. Attract pilot projects for Soundsensing

+(3. Attract contacts for consulting on ML+audio+embedded)

+

+From audience POV

+

+> you as audio professionals, understand:

+>

+> possibilities of on-sensor ML

+>

+> how Soundsensing applies this to Noise Monitoring

+

+> basics of machine learning for audio

+

+

+

+## Partnerships

+

+Research

+

+What do we want to get out of a partnership?

+How can someone be of benefit to us?

+

+- Provide funding from their existing R&D project budgets

+- Provide resources (students, etc.) to work on our challenges

+- Help secure funding in a joint project

+

+

+

+## Calls to action

+

+1-2 Data Science students in Spring 2020.

+

+Looking for pilot projects for Autumn 2020 (or maybe spring).

+

+Interested in machine learning (for audio) on embedded devices?

+Come talk to me!

+Send email.
